
As information warfare becomes more common, agents of various governments are manipulating social media – and therefore people’s thinking, political actions and democracy. Regular people need to know a lot more about what information warriors are doing and how they exert their influence.

One group, a Russian government-sponsored troll farm called the Internet Research Agency, was the subject of a federal indictment issued in February, stemming from Special Counsel Robert Mueller’s investigation into Russian activities aimed at influencing the 2016 U.S. presidential election.

Our recent study of that group’s activities reveals some behaviors that might help identify propaganda-spewing trolls and tell them apart from regular internet users.

Targeted tweeting

We looked at 27,291 tweets posted by 1,024 Twitter accounts controlled by the Internet Research Agency, based on a list released by congressional investigators. We found that these troll accounts focused on tweeting about specific world events like the Charlottesville protests, specific organizations like ISIS, and political topics related to Donald Trump and Hillary Clinton.

That finding fits with other research showing that Internet Research Agency trolls infiltrated and exerted influence in online communities with both left- and right-leaning political views. That helped them muddy the waters on both sides, stirring discord across the political spectrum.

Distinctive behavior

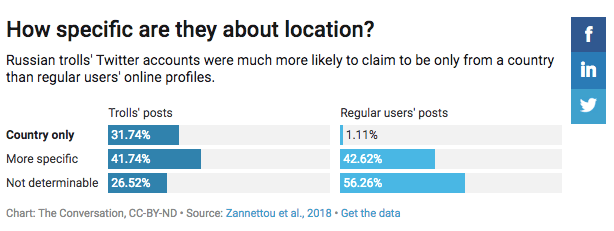

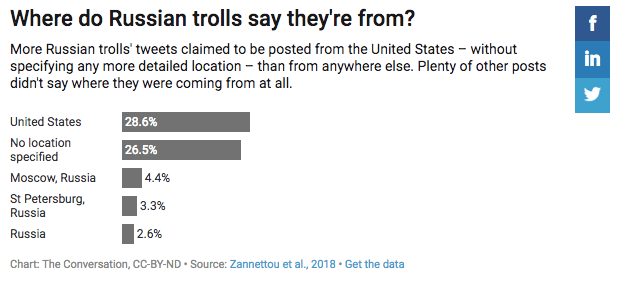

We also found that these troll-farm accounts behaved differently from regular people online. For example, when declaring their locations, they often listed only a country rather than a particular city in that country. That’s unusual: Most Twitter users tend to be more specific, listing a state or town, as we found when we sampled 1,024 Twitter accounts at random. The most common location designation for Russian troll accounts was “U.S.,” followed by “Moscow,” “St. Petersburg,” and “Russia.”

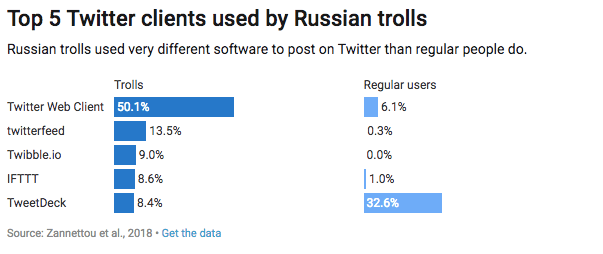

In addition, the troll accounts were more likely to tweet using Twitter’s own website on a desktop computer – labeled in tweets as “Twitter Web Client.” By contrast, we found regular Twitter users are much more likely to use Twitter’s mobile apps for iPhone or Android, or specialized apps for managing social media, like TweetDeck.
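To make these two behavioral signals concrete, here is a minimal Python sketch, assuming a simplified, hypothetical account record that holds a free-text profile location and the client label attached to each tweet. The `Account` type, the small `COUNTRY_NAMES` set, and the 50% threshold are illustrative assumptions, not the researchers’ method. The flags are weak signals at best, since many genuine users also list only a country or tweet from a desktop browser.

```python
from dataclasses import dataclass

# Hypothetical set of bare country designations; a real system would need
# a proper gazetteer to tell countries from cities and states.
COUNTRY_NAMES = {"u.s.", "usa", "united states", "russia"}


@dataclass
class Account:
    location: str             # free-text profile location (hypothetical field)
    tweet_sources: list[str]  # client label of each tweet (hypothetical field)


def country_only_location(account: Account) -> bool:
    """True if the location field is just a country name, with no city or state."""
    return account.location.strip().lower() in COUNTRY_NAMES


def web_client_share(account: Account) -> float:
    """Fraction of the account's tweets posted via "Twitter Web Client"."""
    if not account.tweet_sources:
        return 0.0
    hits = sum(1 for s in account.tweet_sources if s == "Twitter Web Client")
    return hits / len(account.tweet_sources)


def behavioral_flags(account: Account, web_threshold: float = 0.5) -> list[str]:
    """Return the names of the signals this account exhibits.

    These are weak signals, not proof: on their own they cannot
    distinguish a troll from a regular user.
    """
    flags = []
    if country_only_location(account):
        flags.append("country_only_location")
    if web_client_share(account) >= web_threshold:
        flags.append("mostly_web_client")
    return flags
```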

Working many angles

Looking at the Internet Research Agency accounts over 21 months, between January 2016 and September 2017, we found that they frequently reset their online personas by changing account information like their name and description and by mass-deleting past tweets. In this way, the same account – still retaining its followers – could be repurposed to advocate a different position or target a different demographic of users.

For instance, on May 15, 2016, the troll account with the Twitter ID number 4224912857 was calling itself “Pen_Air,” with the profile description “National American news.” In that identity, the account tweeted 326 times, and between May 15, 2016, and July 22, 2016, its follower count rose from 1,296 to 4,308.

But as the U.S. presidential election approached, the account’s persona changed: On September 8, 2016, it renamed itself “Blacks4DTrump” and changed its profile description to “African-Americans stand with Trump to make America Great Again!” Over the next 11 months, it tweeted nearly 600 times, far more often than its previous identity had. This activity likely helped increase the account’s follower count to nearly 9,000.

The activity didn’t stop after the election. Around August 18, 2017, the account was repurposed again. Almost all of its previous tweets were deleted, leaving just 35. Its name became “southlonestar2,” and its description became “Proud American and TEXAN patriot! Stop ISLAM and PC. Don’t mess with Texas.”

In all three incarnations the account’s tweets focused on right-wing political topics, using hashtags like #NObama and #NeverHillary and retweeting other troll accounts, like TEN_GOP and tpartynews.
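The repurposing pattern described here can be sketched in code. The article does not describe how the researchers detected resets, so the following is a hypothetical illustration only: it assumes periodic snapshots of an account’s profile (name, description, and number of visible tweets), and the `Snapshot` type, the `persona_resets` function, and the 50% mass-deletion threshold are all illustrative choices rather than the study’s method.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Snapshot:
    taken: date        # when the profile was observed (hypothetical data source)
    name: str          # display name at that time
    description: str   # profile description at that time
    tweet_count: int   # number of tweets still visible on the profile


def persona_resets(snapshots: list[Snapshot], deletion_ratio: float = 0.5) -> list[date]:
    """Return the dates at which an account appears to have been repurposed.

    A reset is flagged when the name or description differs from the
    previous snapshot, or when the visible tweet count drops by at least
    `deletion_ratio` of its previous value (a mass deletion).
    """
    resets = []
    ordered = sorted(snapshots, key=lambda s: s.taken)
    # Compare each snapshot with the one immediately before it.
    for prev, cur in zip(ordered, ordered[1:]):
        renamed = (cur.name, cur.description) != (prev.name, prev.description)
        mass_deleted = (
            prev.tweet_count > 0
            and cur.tweet_count <= prev.tweet_count * (1 - deletion_ratio)
        )
        if renamed or mass_deleted:
            resets.append(cur.taken)
    return resets
```

Because the followers stay attached to the account across resets, a tool like this would look at changes over time rather than at any single profile snapshot.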

These troll accounts also often tweeted links to posts from Russian government-sponsored organizations purporting to be news.

Fighting trolling

Though our research focused on Twitter, the Internet Research Agency didn’t. The group is known to have operated on Facebook, and in early 2018, Reddit announced that Russian trolls had likely operated on its site as well. That report highlights the fact that the companies hosting social media and online discussion sites are the best informed about what’s happening on their systems. As a result, in our view, the platform companies should provide technical solutions, analyzing activity and taking action to safeguard users from secret influence campaigns by government agents.

Yet even if the large platforms like Twitter, Facebook, and Reddit were somehow able to completely eradicate trolling by professional government agents, there are many smaller communities online that may remain vulnerable. Some of our previous work has shown how ideas that first emerge on fringe sites like 4chan can rapidly make it to mainstream discussions online and in the real world.

Russian trolls could take advantage of that tendency to infiltrate these smaller sites, like Gab or Minds, influencing real people who also use those systems – and getting them to spread propaganda and disinformation more widely.

It’s clear to us that technological solutions on their own cannot solve the problem of government-sponsored trolling online. The trolls’ efforts take advantage of weaknesses in society; the only fix for that is for people as individuals and collectively to think more critically about online information, especially before sharing it. – Rappler.com

This article originally appeared on TheConversation.com.

Savvas Zannettou receives funding from EU project “ENCASE” Grant Agreement number 691025. Jeremy Blackburn does not work for, consult, own shares in or receive funding from any company or organization that would benefit from this article, and has disclosed no relevant affiliations beyond their academic appointment.
