From helping in the global fight against Covid-19 to driving cars and writing classical symphonies, artificial intelligence is rapidly reshaping the world we live in. But not everyone is comfortable with this new reality. The billionaire tech entrepreneur Elon Musk has referred to AI as the “biggest existential threat” of our time. With recent scientific studies testing the technology’s ability to evolve on its own, every step in its development throws up new concerns as to who is in control and how it will affect the lives of ordinary people.

Here are 9 important milestones in the history of AI and the ethical concerns that have long loomed over the field.

Ancient roots and fairy tales

The ancient world had many tales of intelligent mechanical beings, with relatable personalities and extraordinary skills. In Greek mythology dating back to 700 BC, Hephaestus, god of technology, crafts a giant from bronze, gives him a soul and names him Talos. Chinese writings from the 3rd century BC include the story of Yan Shi, an inventor who presents a king with a mechanical man who can walk and sing “perfect in tone.”

Stories like these have also long raised concerns about the absence of morality in non-human intelligence. In the late 19th century, Italian author Carlo Collodi introduced children to Pinocchio, a wooden puppet who comes to life and dreams of being a real boy. From the moment of his creation, Pinocchio struggles to obey authority and conform to society, causing chaos along the way. While Walt Disney's 1940 animated adaptation of the story had a happy ending, many aspects of Collodi's original version anticipate contemporary fears about AI.

Mathematicians improve on fairy tales, 17th century

Machine learning relies on identifying patterns in data in order to simulate human actions or thought. As far back as the 17th century, thinkers such as Gottfried Wilhelm von Leibniz sought to represent human cognition in computational terms. In 1673, Leibniz built the Step Reckoner, a machine that could not only add and subtract but also multiply and divide, operated by turning a hand crank that rotated a series of stepped drums.

Further advances in algebra began to provide the mathematical language to express a much wider range of ideas, opening up vast new possibilities for "thinking" machines. But a tension also emerged that still exists in AI innovation today: to what extent can notions of right and wrong be represented as precise mathematical formulas?

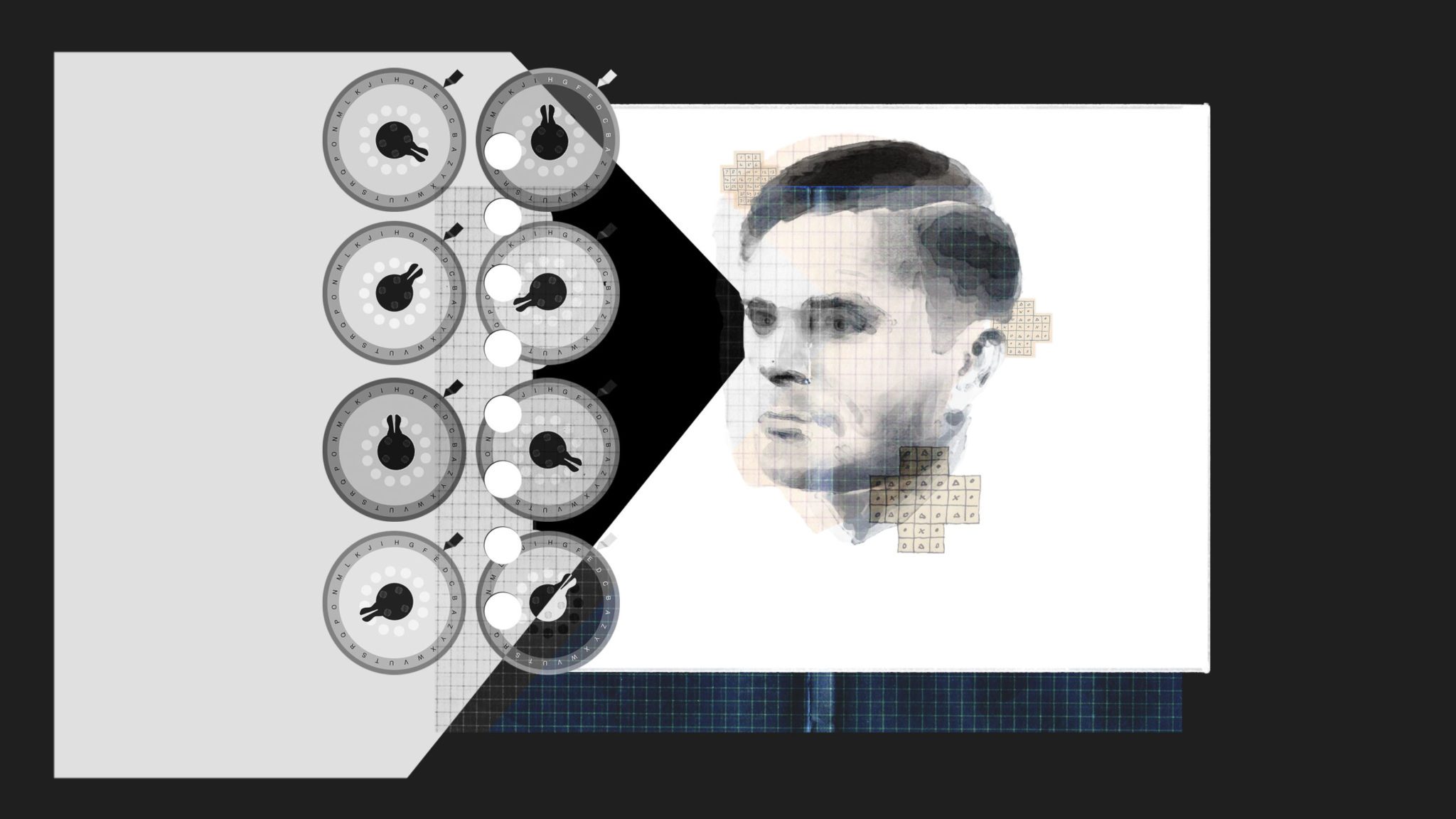

Alan Turing, 1912-1954

The term “artificial intelligence” first entered the lexicon two years after Alan Turing’s death, but the work of this groundbreaking British mathematician paved the way for great leaps in the field. Best known for his work cracking the Enigma code used by German military command to send messages during World War II, Turing laid the foundations of computer science and formalized the idea of the algorithm. As early as 1947, he spoke publicly about a “machine that can learn from experience.” The method he proposed in 1950 for determining whether a computer can think like a human being, known as the Turing Test, remains a reference point for AI researchers today.

Dartmouth Conference, 1956

When we talk about contemporary iterations of “artificial intelligence,” we are using words coined by John McCarthy, who in 1956 was a 28-year-old professor at Dartmouth College. The term is tied to a summer workshop on artificial intelligence that McCarthy organized at Dartmouth that year with Marvin Minsky, Nathaniel Rochester and Claude Shannon. The organizers had originally planned for only a few other researchers to attend, but instead dozens arrived from various fields of science, a sign of both the excitement surrounding the field and its real potential.

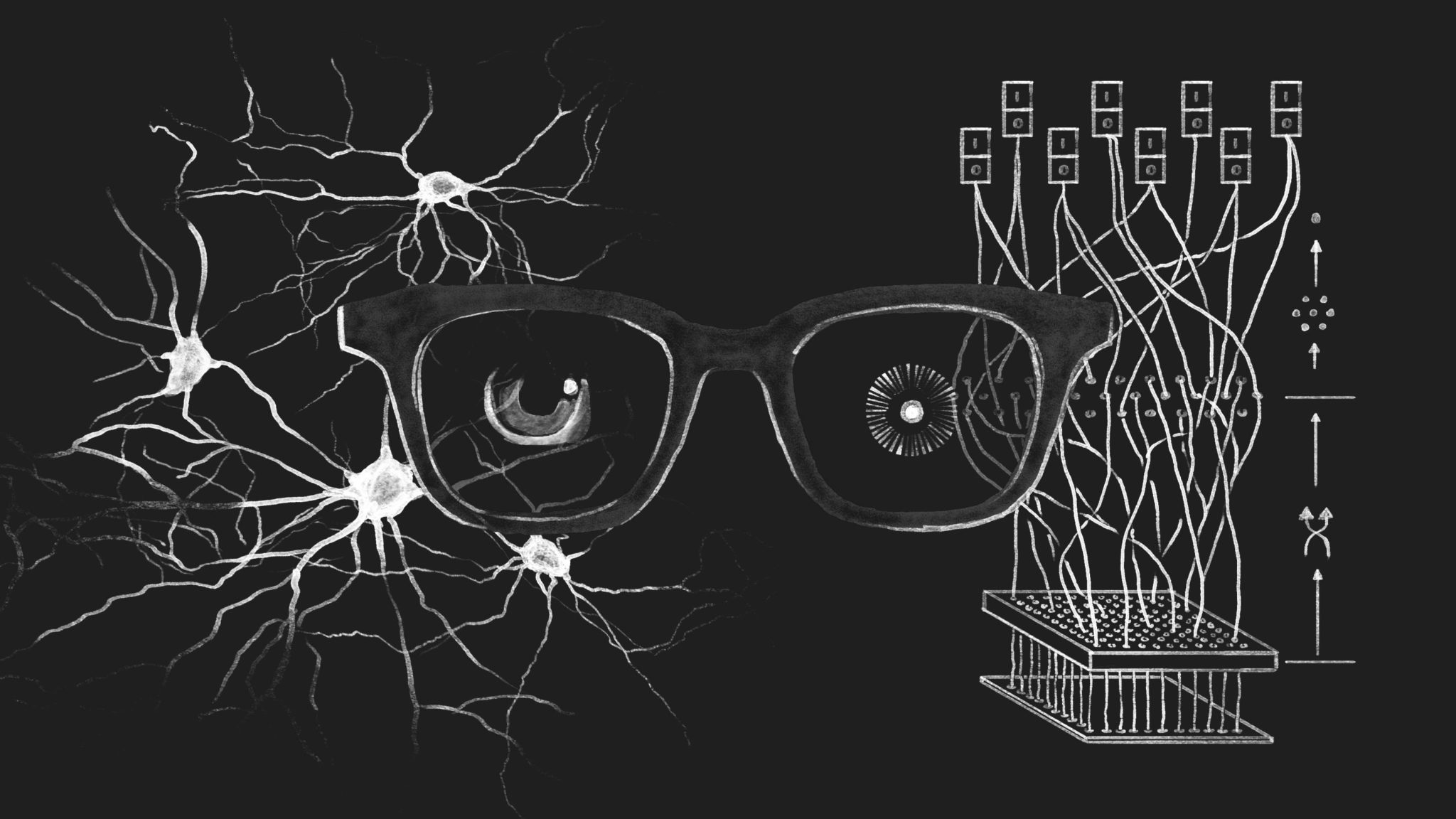

Godfather of artificial intelligence: Frank Rosenblatt, 1928-1971

Mathematicians weren’t the only ones interested in artificial intelligence at this time. Frank Rosenblatt was a research psychologist at the Cornell Aeronautical Laboratory and a pioneer in using biology to inspire research in AI. In 1958, his work led him to create the perceptron, an electronic device designed to mimic the neural networks of the human brain and enable pattern recognition.

Rosenblatt first simulated the perceptron on an early IBM computer, in research funded by the U.S. Navy’s Office of Naval Research. The New York Times described the technology as “the embryo of an electronic computer” that was expected to “be able to walk, talk, see, write, reproduce itself and be conscious of its existence.”

20th-century science fiction

No account of the history of AI should ignore the role played by the arts in illustrating how future worlds might function. From the very beginning, fantasy has been integral to the development of artificial intelligence. Real-life technology has also inspired an entire genre of science-fiction novels and movies. Authors and filmmakers from Isaac Asimov to Ridley Scott have agonized over what machine learning might unleash and what it means for humanity. Artificial intelligence is already used in some areas of statistics-heavy journalism, and there have been experiments in fiction writing as well. In 2016, Ross Goodwin, an AI researcher at New York University, teamed up with director Oscar Sharp to create a bizarre, machine-written film.

Some of the most informed depictions of AI in 20th-century literature and on screen include:

- Metropolis, 1927

- 2001: A Space Odyssey, 1968

- Robot series, Isaac Asimov, 1950

- Westworld, 1973

- Golem XIV, Stanislaw Lem, 1981

- Blade Runner, 1982

- Ghost in the Shell, 1995

- The Matrix, 1999

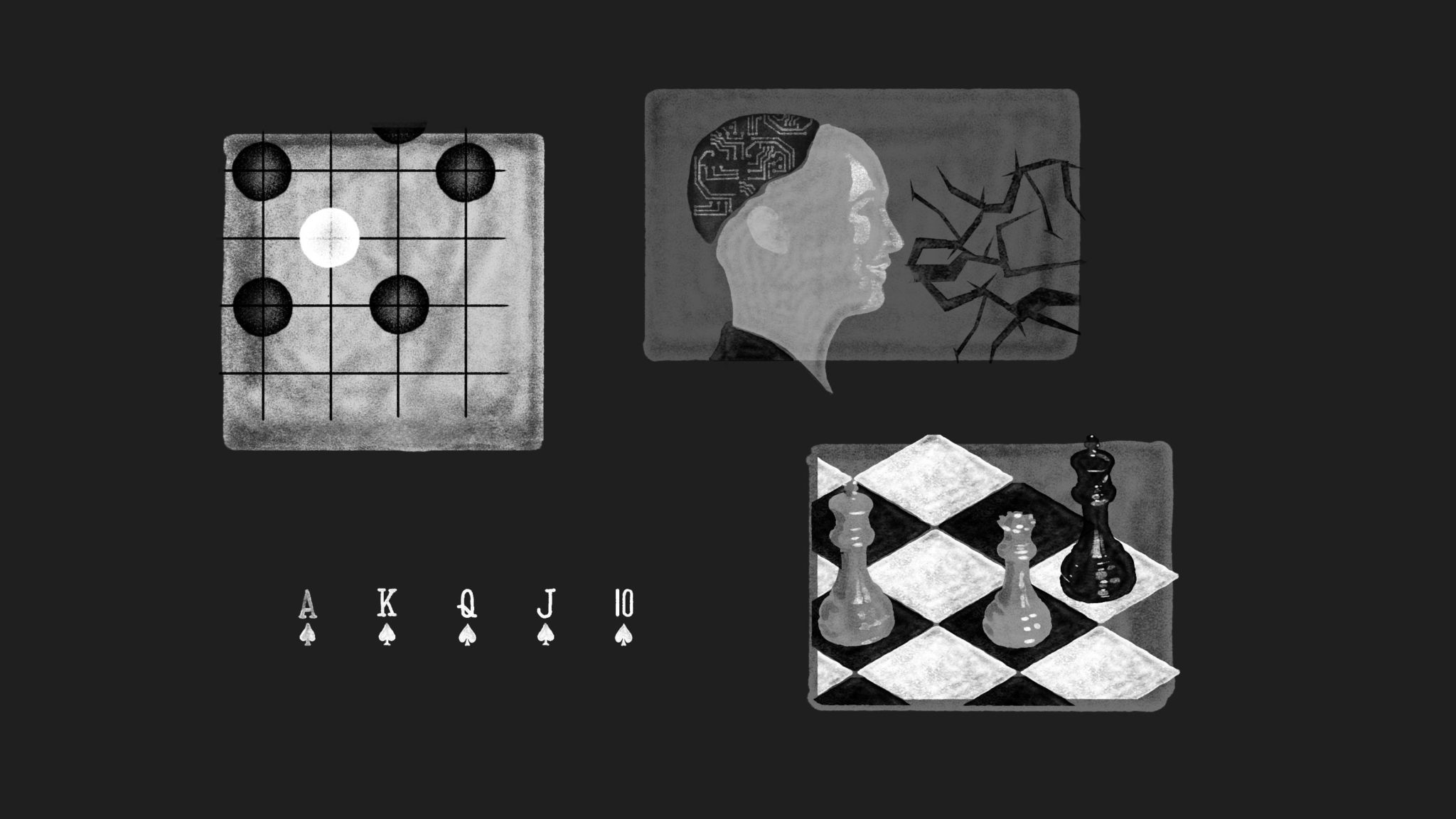

Public triumphs of AI

Over the past few decades, there have been a number of high-profile demonstrations of AI’s superiority over mere mortals. In 1997, IBM’s Deep Blue beat world champion Garry Kasparov at chess, becoming the first computer to defeat a reigning world champion in a match. Another milestone was reached in 2011, when IBM’s Watson system won $1 million on the U.S. TV game show “Jeopardy!” Then, in 2015, AlphaGo, developed by Google’s DeepMind, trounced Fan Hui, the reigning European champion, at the ancient Chinese board game Go.

However, things don’t always run so smoothly. Take, for instance, the time in 2016 when a lifelike robot named Sophia declared she would “destroy humans” during a demonstration at the South by Southwest conference. The statement was in response to what was apparently a joke from Sophia’s creator David Hanson.

AI moves to the city

National and local governments around the world have incorporated AI into systems designed to manage and streamline city infrastructure and services. There are currently just over 1,000 smart city projects in countries including China, Brazil and Saudi Arabia, according to research from the professional services firm Deloitte. This is where the technology is leaving its biggest footprint on our world.

From security cameras and traffic control systems to the data collected by free wireless internet networks, our daily movements and behaviors are all increasingly processed, analyzed and mined for data. Millions of personal electronic devices like smartphones and laptops are connected to the internet, generating massive amounts of information, which is of great value to both governments and corporations. From Xinjiang to Moscow, smart city technology is becoming a key tool for authoritarian governments to strengthen their grip on power.

According to Fan Yan, a privacy expert from Deakin University in Australia, such systems rely on “citizen’s data collected in real time from all walks of their life” and “not just in the context of China.”

Fear and innovation

Recent innovations in artificial intelligence have left almost no area of contemporary life and work untouched. Many of our homes are now equipped with “smart” devices such as Amazon’s Alexa and Google Nest. AI has also driven sweeping changes in medicine, agriculture and finance. Many of these changes have been positive, but the drawbacks are increasingly apparent as governments and workers worry that AI systems built for efficiency could lead to massive job losses. In 2019, IBM reported that as many as 120 million workers in the world’s 12 largest economies may need retraining within the next three years, while Fortune magazine wrote that about 38% of location-based jobs would become automated in the next decade.

In 2015, the late British physicist Stephen Hawking stated that the technology is already so advanced that computers will overtake humans “at some point within the next 100 years.” His prediction was meant as a warning. “We need to make sure the computers have goals aligned with ours,” he said. – Rappler.com

Katia Patin is a multimedia editor at Coda Story.

This article has been republished from Coda Story with permission.
