“Your account has been suspended.”

“You cannot post or comment for 3 days”

“You can’t go live for 63 days”

For Afghan journalist Shafi Karimi, the list of restrictions that Facebook has imposed on him goes on and on. “I am blocked and I am losing an audience, and people are losing vital information,” says Karimi, who is covering Afghanistan from exile in France.

He is not the only one. From Afghanistan to Ukraine, and much of the rest of the non-English speaking world, journalists are losing their voice. Not only because of the increasingly oppressive governments that target them, but also because policies created in Silicon Valley are helping oppressors of free speech peddle disinformation.

Over the past month, Karimi has sent numerous appeals to Facebook, but has gotten no reply. And so last week, Karimi pushed his way through a champagne-sipping crowd of journalists and media representatives at a reception that Meta, Facebook’s parent company, threw at the International Journalism Festival in Perugia, Italy.

The festival is one of the industry’s key annual events and a rare opportunity for journalists like Karimi to speak to big tech company representatives directly.

Karimi found a Meta staff member and, shouting over the crowd, tried to explain to him how all independent voices on Afghanistan are being affected by Facebook’s poorly thought-out policy that seems to indiscriminately label all mentions of the Taliban as hate speech and then summarily remove them. He explained that Facebook is an essential platform for people stuck in the Taliban-imposed information vacuum and that blocking those voices benefits first and foremost the Taliban itself. The Meta representative listened and asked Karimi to follow up. Karimi did – twice – but never heard back.

As Karimi was pleading with one Meta employee, I cornered another one across the crowded reception hall to deliver a similar message from a different part of the world. A friend working for an independent television station in Georgia had asked me to pass on that her newsroom had lost a staggering 90% of its Facebook audience since it began covering the war in Ukraine. The station, called Formula TV, made countless attempts to contact Meta but received no response.

Was there anything these Meta staffers could do to help?

‘Facebook pages of real, independent journalism are dying’

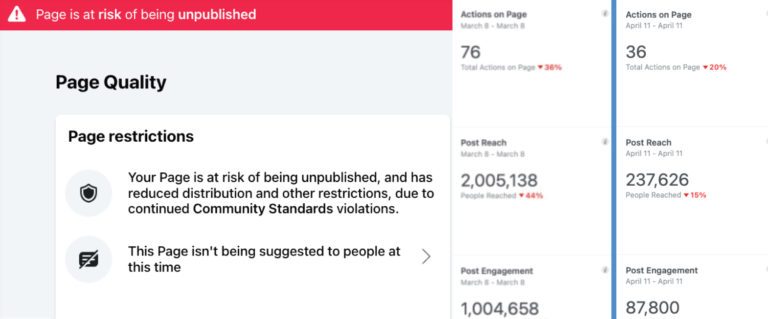

This seemingly technical error caused the station to lose 90% of its audience, but also a chunk of its revenue. “Monetization has been suspended. It is a harsh punishment,” Ugulava said.

The Tbilisi-based opposition TV station Mtavari saw similar declines when it ran a story about the Azov Battalion, a Ukrainian volunteer paramilitary group that has been defending Mariupol, where some of the worst atrocities of the war have occurred. Facebook removed the story on grounds that it was “a call to terrorism.” The Azov Battalion is controversial because of the far-right and even neo-Nazi leanings of many of its members, but only Russia categorizes it as a terrorist organization.

“We were already under constant attacks from Georgian government troll farms, but since the invasion Russian-backed organizations began reporting us too. Azov incident was one of many,” Nika Gvaramia, the channel’s Director General, told me.

Gvaramia says many of the Ukraine-related stories that Mtavari posted in early March were taken down by Facebook in early April, weeks after they were first published and seen by millions of people. The Mtavari team has good reason to believe they are being reported by Kremlin-backed accounts.

“The worst part is that there is no warning mechanism, there is no obvious criteria for these takedowns and appeals to Facebook take weeks … Facebook pages of real, independent journalism are dying,” Gvaramia said. His channel’s engagement, he said, dropped from 22 million in early March to 6 million in early April.

For a place as small as Georgia (population 3.5 million) the consequences of these takedowns are huge. Like in many countries outside the West, Facebook has become the nation’s virtual public square – the place where people gather to discuss and debate their future. This discussion is existential in Georgia, because the future is so precarious: twenty percent of the country is already occupied by Russia, and many fear that Russia’s invasion of Ukraine will push Georgia further into Moscow’s embrace. Kremlin-funded disinformation campaigns have put independent media covering the situation under massive pressure. When media outlets like Formula TV and Mtavari disappear from people’s Facebook feeds, the very ideas of liberal democracy disappear from the public debate.

“The power that Facebook has is scary. The way it is using it is even scarier,” a Russian journalist, who did not want to be named due to security concerns, told me. Her account was suspended after she was reported to Facebook by numerous accounts accusing her of violating community standards. She suspects the accounts that reported her were working on behalf of the Russian government. Like Karimi, who says Facebook is helping the Taliban, she says Facebook’s policies are aiding Vladimir Putin’s agenda.

“Silicon Valley is helping Putin to win the information war. It is insane and it has to stop,” she said. “But we don’t know how to tell them this, because it is impossible to speak to them directly.”

None of this is new

Meta has been accused of promoting hatred and disinformation around the world before, from Nigeria to Palestine to Myanmar, where the company was famously accused of fueling the genocide of Rohingya Muslims. And experts in regions like the Middle East and Africa have noted that even though tech platforms are failing to adequately address the content crisis around Ukraine, they have brought a faster and more robust public response in this case than in places like Syria or Ethiopia.

With each new crisis, Meta has made new promises to better account for all the cultural and linguistic nuances of posts around the world. The company even put out an earnest-sounding human rights policy last year that focused on these issues. But there’s little evidence that its practices are actually changing. Facebook does not disclose how many moderators it employs, but estimates suggest around 15,000 people are charged with vetting content generated by Facebook’s nearly 3 billion users globally.

“It is like putting a beach shack in the way of a massive tsunami and expecting it to be a barrier,” one moderator told me. I cannot name him, or say where he is, because Facebook subcontractors operate under strict non-disclosure agreements. But he and other people with access to moderators told me that the Ukraine war is the latest proof that Facebook’s content moderation model does more harm than good.

Facebook moderators have 90 seconds to decide whether a post is allowed to go up or not. From Myanmar to Ukraine and beyond, they are dealing with incredibly graphic images of violence or highly contextual speech that typically doesn’t line up with Facebook’s byzantine rules on what is and is not allowed. The system, in which posts live or die depending on a quick decision of an overworked, underpaid and often traumatized human, takes a toll on the mental health of the moderators. But it is also damaging the health of the information ecosystem in which we live.

“The weight of this war is falling on outsourced moderators, who have repeatedly sounded the alarm,” says Martha Dark, director of Foxglove Legal, a UK-based tech justice non-profit group that is working on issues of mistreatment of Facebook content moderators around the world.

“Despite its size and its colossal profits, Ukraine has shown that Facebook’s systems are totally unequipped to deal with all-out information war,” says Dark. “No one is saying moderating a war zone is an easy task. But it’s hard to shake the sense that Facebook isn’t making a serious effort at scaling up and fixing content moderation – because to do so would eat into its profit margins. That’s just not good enough.”

Facebook has pledged to reply to my questions about the cases of Shafi Karimi from Afghanistan and Formula TV in Georgia in the coming days – I’ll report on it as soon as I hear back. In the meantime, my contacts there offered this response, which is attributed to a “Meta spokesperson”:

“We’re taking significant steps to fight the spread of misinformation on our services related to the war in Ukraine. We’ve expanded our third-party fact-checking capacity in Russian and Ukrainian, to debunk more false claims. When they rate something as false, we move this content lower in Feed so fewer people see it, and attach warning labels. We also have teams working around the clock to remove content that violates our policies. This includes native Ukrainian speakers to help us review potentially violating Ukrainian content. In the EU, we’ve restricted access to RT and Sputnik. Globally we are showing content from all Russian state-controlled media lower in Feed and adding labels from any post on Facebook that contains links to their websites, so people know before they click or share them.”

It’s true that the company has deplatformed some of the most prominent sources of Russian disinformation, such as Russian state broadcasters. And we can’t know what impact some of these other measures are having, due to the company’s lack of transparency about its actual day-to-day content moderation decisions. But the real power of Facebook, which is arguably the most potent communication tool in the world at the moment, lies in organic, peer-to-peer shares, and that’s where so much disinformation flourishes.

“We can no longer cover the war,” says the Georgian journalist Salome Ugulava. “Our followers are not seeing us on their feeds.”

It’s not just Facebook: Twitter is facing similar accusations of doing a terrible job policing its platform when it comes to Ukraine. “You are failing,” tweeted journalist Simon Ostrovsky, who is covering Ukraine for PBS NewsHour. “Hundreds of sock puppet accounts attack every tweet that counters the Kremlin narrative, meanwhile you fall for coordinated campaigns to suspend genuine accounts.”

This story originally ran in Coda Story’s weekly Disinfo Matters newsletter that looks beyond fake news to examine how manipulation of narratives, rewriting of history and altering our memories is reshaping our world. We are currently tracking the war in Ukraine.

This article has been republished from Coda Story with permission.
