SAN FRANCISCO, USA – Facebook said on Thursday it will stop flagging fake news as “disputed,” opting instead to offer up contradictory stories containing facts that have been checked.

The change comes as the leading online social network strives to stymie use of its platform to spread bogus information.

Lessons learned so far in the effort to combat fake news include the fact that dispelling misinformation is difficult, especially when it involves something people are already determined to believe, said product designer Jeff Smith, user experience researcher Grace Jackson and content strategist Seetha Raj of Facebook in an online post.

“Just because something is marked as ‘false’ or ‘disputed’ doesn’t necessarily mean we will be able to change someone’s opinion about its accuracy,” they said.

“In fact, some research suggests that strong language or visualizations (like a bright red flag) can backfire and further entrench someone’s beliefs.”

Drawbacks of “disputed” flags include requiring people to click through to get more information and needing more fact-checkers than are sometimes available, according to Facebook.

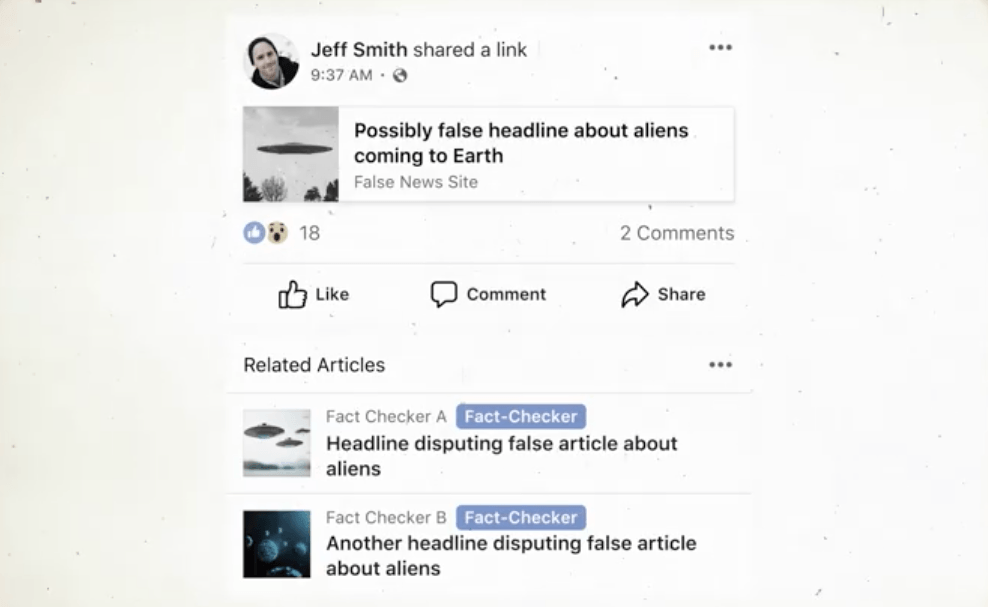

Facebook found it more effective to show fact-checked articles on the same subject before someone clicks a link to a story considered dubious. Here’s how it looks, according to the Facebook video posted above:

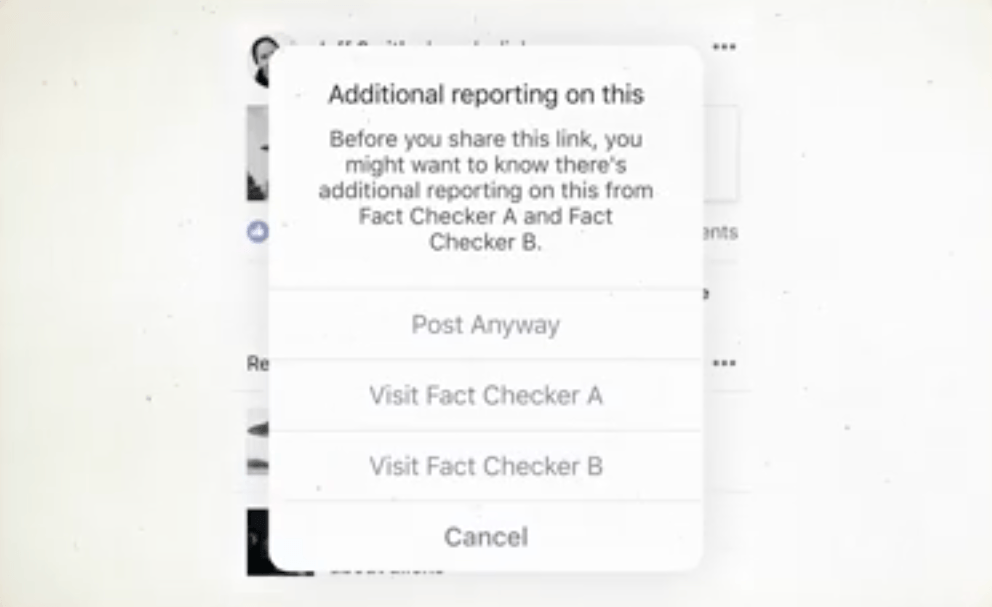

When a user tries to share a disputed story, a prompt appears suggesting other, fact-checked articles.

In the same video, Facebook said that it takes fact-checkers about three days to verify or debunk a disputed story. The process has to be faster, Facebook said, adding that it is looking for ways to speed things up, but it did not give specifics.

Facebook began testing that approach in August.

While click-through rates on hoax articles did not change significantly, Facebook found that the articles were shared less often on the social network than when “disputed” flags were displayed, according to the blog post.

“It makes it easier to get context, it requires only one fact-checker’s review, it works for a range of ratings, and it doesn’t create the negative reaction that strong language or a red flag can have,” the Facebook team said.

Last month Facebook joined global news organizations in an initiative aimed at identifying “trustworthy” news sources, in the latest effort to combat online misinformation.

Google, Microsoft and Twitter also agreed to participate in the “Trust Project” with some 75 news organizations to tag news stories that meet standards for ethics and transparency.

Google, Twitter and Facebook have come under fire for allowing the spread of bogus news – some of which was directed by Russia – ahead of the 2016 US election, as well as in other countries.

Facebook chief executive Mark Zuckerberg used a quarterly earnings call last month to address criticism of Facebook for allowing disinformation and manipulation during the 2016 US presidential election.

“We’re serious about preventing abuse on our platforms,” Zuckerberg said.

“Protecting our community is more important than maximizing our profits.” – Rappler.com