
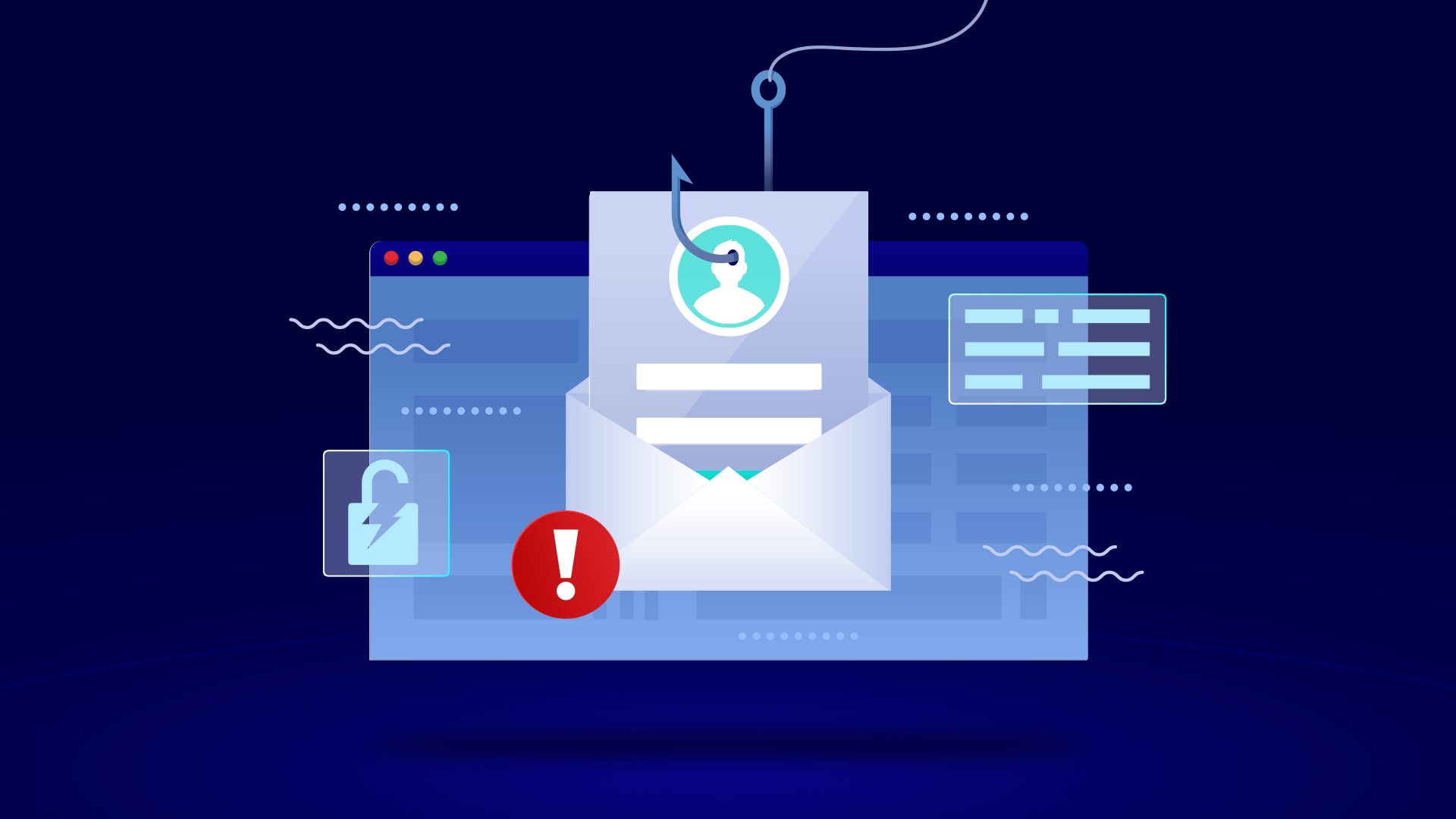

MANILA, Philippines – British-American cyber defense company Darktrace revealed in an April 2 blog post that the risk of people falling prey to malicious emails and “novel social engineering attacks” appears to be increasing, and the company set that rise against the backdrop of growing adoption of generative AI such as ChatGPT.

The blog post by Max Heinemeyer, chief product officer at Darktrace, cited findings from the Darktrace Research arm pointing to “a 135% increase in ‘novel social engineering attacks’ across thousands of active Darktrace/Email customers from January to February 2023, corresponding with the widespread adoption of ChatGPT.”

Heinemeyer explained that a “novel” social engineering email, in this sense, is one whose language deviates strongly from that of the average phishing attack.

“The trend suggests that generative AI, such as ChatGPT, is providing an avenue for threat actors to craft sophisticated and targeted attacks at speed and scale,” he wrote.

Trust comes last

Heinemeyer also described one major problem that generative AI is creating: security training now yields diminishing returns as phishing emails grow more sophisticated and normal-seeming. This also makes work harder for people, especially once they have come to distrust the emails they receive.

He anticipates that the erosion of trust in digital communications will continue, or even worsen, as more types of generative AI are used maliciously to dupe people.

Heinemeyer added, “What happens to the workplace if an employee is left questioning their own perception when communicating with their manager or a colleague, who they can see and hear over a video conference call, but who may be an entirely fictitious creation?”

AI as bane, AI as boon

Darktrace expects future AI tools to also take into account the habits and needs of email users and help them ward off threats. Rather than trying to predict attacks, AI could be used to better understand employees’ behavior and patterns based on how they interact with their inboxes.

Heinemeyer said, “The future lies in a partnership between artificial intelligence and human beings where the algorithms are responsible for determining whether communication is malicious or benign, thereby taking the burden of responsibility off the human.”

While this position hands the burden of security responsibility over to AI, using it to combat email threats, it also envisions a world where even more AI, and an intrusive one at that, is needed to fight malicious AI and human actors bent on the very same thing: breaking into an individual’s business email.

It’s not a perfect solution to the malicious use of generative AI, but it does make for a simpler, if hefty, proposition for businesses and cybersecurity firms alike. – Rappler.com
