Apple on Wednesday, May 19, US time, announced a set of new features that would improve accessibility across its lineup of devices. Software updates designed for people with mobility, vision, hearing, and cognitive disabilities are set to be rolled out this year.

These include AssistiveTouch, which allows people with limb differences to navigate Apple Watch; support for eye-tracking hardware on iPad; a smarter VoiceOver screen reader for blind and low-vision users; a new service called SignTime, which lets customers communicate with customer care with the help of a sign language interpreter; and support for bi-directional hearing aids for the hard of hearing.

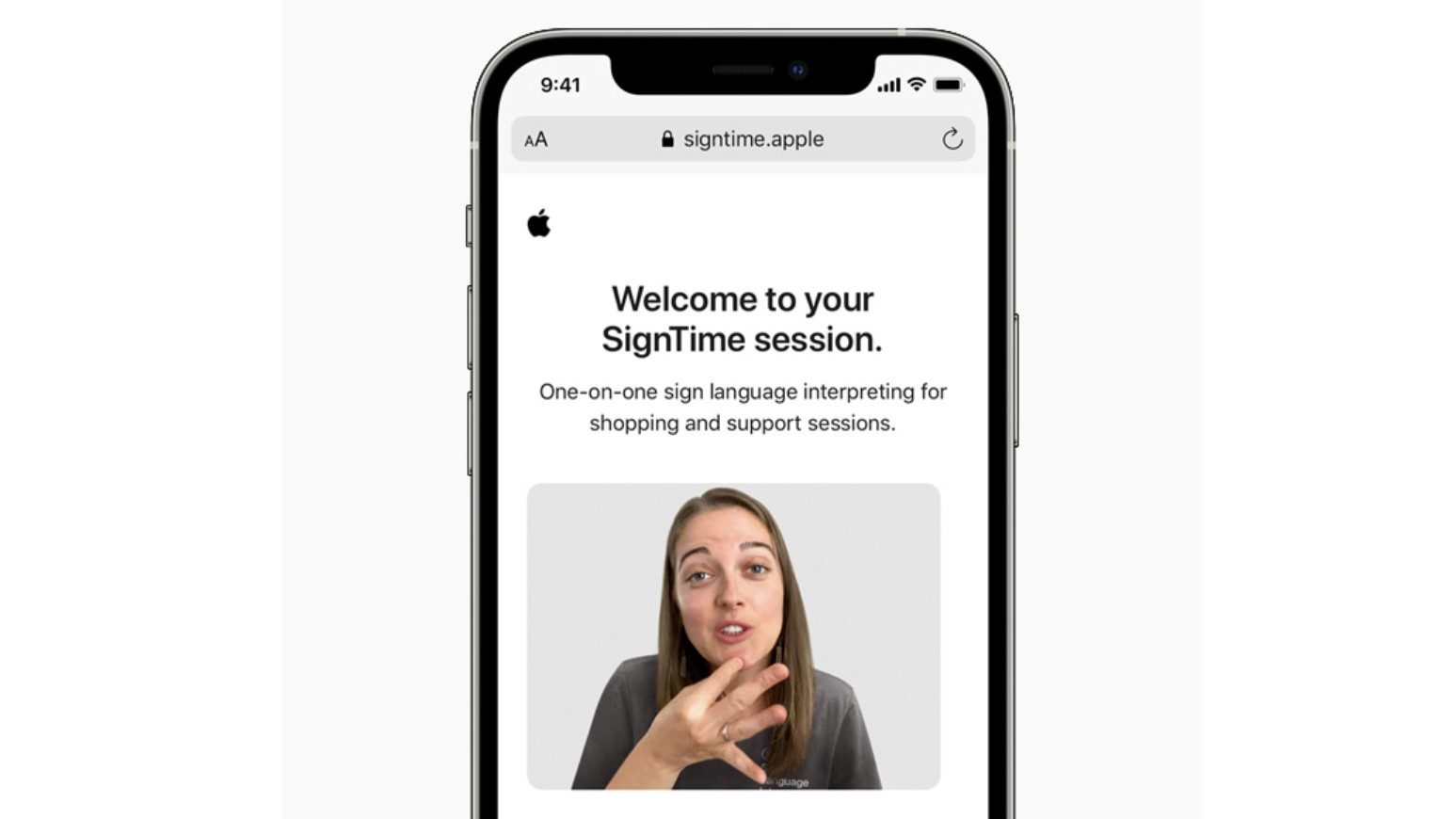

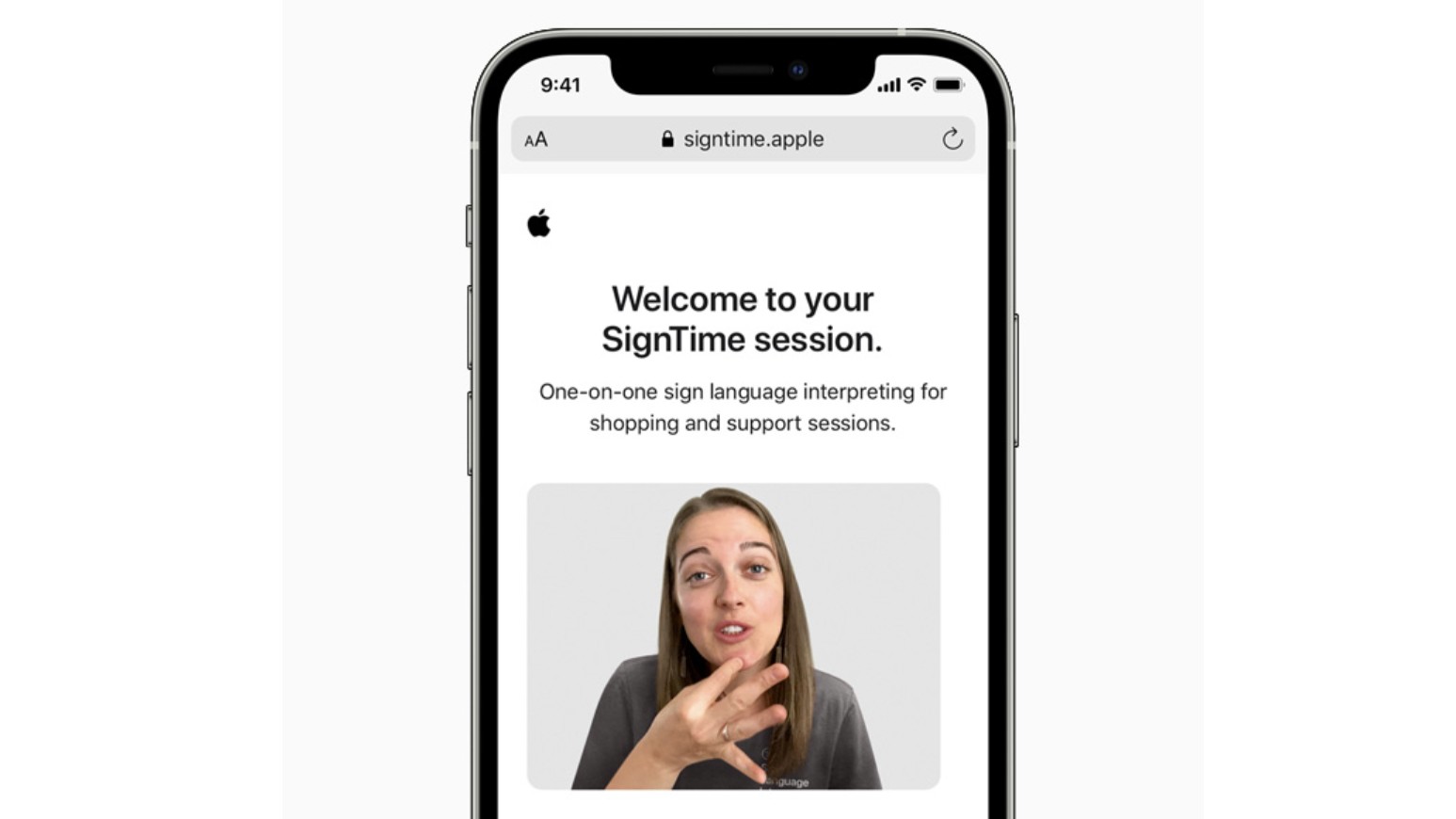

SignTime

SignTime launched on Thursday, May 20, but is currently only available in the US, UK, and France. Apple said it plans to expand to more countries in the future.

With SignTime, a customer visiting an Apple Store location can remotely access a sign language interpreter to help them communicate with store representatives.

Eye-tracking support for iPad

Apple will support third-party eye-tracking devices on iPad, making it possible for people to control the tablet with just their eyes. The eye-tracker monitors where the person is looking, and the onscreen pointer follows their gaze. Extended eye contact, for example, would perform an action like a tap.

AssistiveTouch for Apple Watch

AssistiveTouch will use the Apple Watch's built-in motion sensors, such as the gyroscope and accelerometer, along with the heart rate sensor and machine learning, to “detect subtle differences in muscle movement and tendon activity,” which would allow users with upper body limb differences to navigate the watch.

Apple says this will make it easier to answer calls, control an onscreen motion pointer, and access Notification Center, Control Center, and more.

Improved VoiceOver, hearing aid support

VoiceOver is Apple’s screen reader for blind and low-vision users, and it includes a feature that describes images. The update will improve those descriptions and bring more details about the “people, text, table data, and other objects within images.” VoiceOver will also soon let people navigate a photo more easily, and will be able to describe a person’s position in relation to other objects in an image.

For the hearing impaired, Apple is adding support for bi-directional hearing aids, which would allow users to have hands-free phone and FaceTime conversations. It’s also improving automatic leveling of sounds through a feature called Headphone Accommodations.

Apple is also introducing new background sounds “to help minimize distractions and help users focus, stay calm or rest,” similar to what a meditation app offers. The sounds include ocean, rain, and stream. – Rappler.com
