
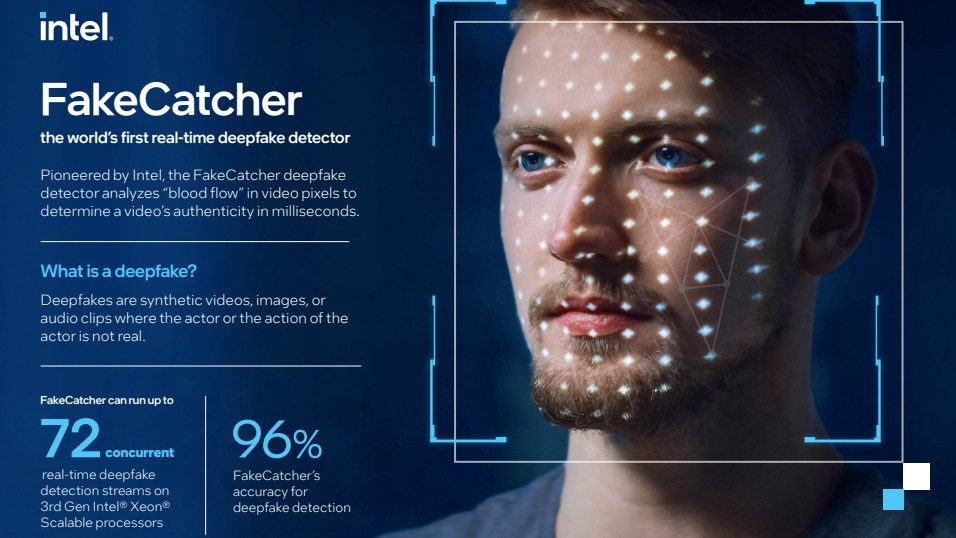

MANILA, Philippines – Intel on Monday, November 14, introduced new software that it says can identify deepfake videos in real time. The company claims it’s the world’s first real-time deepfake detector, able to return results in milliseconds with an accuracy rate of 96%.

Deepfakes are videos that use AI to convincingly mimic a person’s face, making it appear that the person said or did something they did not.

Intel’s deepfake detection platform is called FakeCatcher, developed by Ilke Demir, senior staff research scientist in Intel Labs, in collaboration with Umur Ciftci from the State University of New York at Binghamton.

Intel, on its blog, explains how the new detector is different from previous efforts:

“Most deep learning-based detectors look at raw data to try to find signs of inauthenticity and identify what is wrong with a video. In contrast, FakeCatcher looks for authentic clues in real videos, by assessing what makes us human – subtle “blood flow” in the pixels of a video.

When our hearts pump blood, our veins change color. These blood flow signals are collected from all over the face and algorithms translate these signals into spatiotemporal maps. Then, using deep learning, we can instantly detect whether a video is real or fake.”
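To make the idea of a "spatiotemporal map" more concrete, here is a minimal sketch in Python. It is not Intel's implementation, whose details the blog post does not give. It assumes the face has already been cropped from each frame, uses the mean green-channel intensity of each grid cell as a stand-in for a blood flow signal, and leaves out the deep learning classifier entirely. The function names `spatiotemporal_map`, `dominant_frequency_hz`, and `make_synthetic_clip` are hypothetical, and the test clip is synthetic rather than real video.

```python
import numpy as np


def spatiotemporal_map(frames, grid=(4, 4)):
    """Return a (regions x time) map of mean green intensity per face region.

    frames: array of shape (T, H, W, 3) in RGB order, assumed to be already
    cropped to the face (face detection is outside this sketch).
    """
    frames = np.asarray(frames, dtype=np.float64)
    t, h, w, _ = frames.shape
    rows, cols = grid
    cell_h, cell_w = h // rows, w // cols

    # Keep only the green channel and trim so the grid divides evenly.
    green = frames[..., 1][:, : cell_h * rows, : cell_w * cols]

    # Average the pixels inside each grid cell, frame by frame.
    cells = green.reshape(t, rows, cell_h, cols, cell_w).mean(axis=(2, 4))

    # One row per region, one column per frame.
    signals = cells.reshape(t, rows * cols).T

    # Subtract each region's average so only the change over time remains.
    return signals - signals.mean(axis=1, keepdims=True)


def dominant_frequency_hz(signal, fps):
    """Return the strongest non-zero frequency in a 1-D signal."""
    spectrum = np.abs(np.fft.rfft(signal))
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / fps)
    spectrum[0] = 0.0  # ignore the constant (DC) component
    return freqs[np.argmax(spectrum)]


def make_synthetic_clip(fps=30, seconds=10, pulse_hz=1.2, size=32, seed=0):
    """Create a fake 'face' clip whose green channel pulses at pulse_hz."""
    rng = np.random.default_rng(seed)
    n_frames = fps * seconds
    times = np.arange(n_frames) / fps
    clip = np.full((n_frames, size, size, 3), 128.0)
    clip[..., 1] += 2.0 * np.sin(2 * np.pi * pulse_hz * times)[:, None, None]
    clip += rng.normal(0.0, 1.0, clip.shape)  # simulated sensor noise
    return clip


if __name__ == "__main__":
    clip = make_synthetic_clip()
    smap = spatiotemporal_map(clip)
    print(smap.shape, dominant_frequency_hz(smap[0], fps=30))
```

In this toy setup, every region of the synthetic clip carries the same pulse, so each row of the map shows the same dominant frequency. A real detector would pass maps like this to a trained model rather than inspect a single frequency.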

Intel explained that it developed the technology in response to the growing threat of deepfake videos. Citing research firm Gartner, Intel said companies will spend up to $188 billion to combat the threat, which is considered a potentially dangerous weapon in the age of disinformation, especially as deepfake videos become more convincing.

Intel’s solution, which includes a user-facing web interface, works in real time. That matters because, as the company says, most detection apps require users to upload videos for analysis and then wait hours for results. By the time a video is tagged as fake, it may already have spread online and influenced people.

Intel also listed the technology’s potential use cases. “Social media platforms could leverage the technology to prevent users from uploading harmful deepfake videos. Global news organizations could use the detector to avoid inadvertently amplifying manipulated videos. And nonprofit organizations could employ the platform to democratize detection of deepfakes for everyone,” said the company.

The new software is part of Intel’s Responsible AI program.

One potential flaw of the program may be reduced accuracy in detecting deepfakes of darker-skinned individuals, since it relies on blood flow signals. There is evidence, for example, that pulse oximeters, which measure blood oxygen levels by shining a light through a finger, can be inaccurate for people with darker skin. – Rappler.com
