In today’s digital age, where the power of public opinion can be weaponized with unprecedented ease, a single tweet can shape the destiny of a global Hollywood blockbuster or make or break a quaint local eatery in Washington DC. This phenomenon, known as review bombing, transcends industries and scales, targeting entities of all kinds.

While negative reviews and ratings might naturally stem from customers candidly sharing their individual negative experiences, the crux of the issue with review bombing lies in its potential to be driven by motives that are irrelevant to the actual quality of the subject being reviewed.

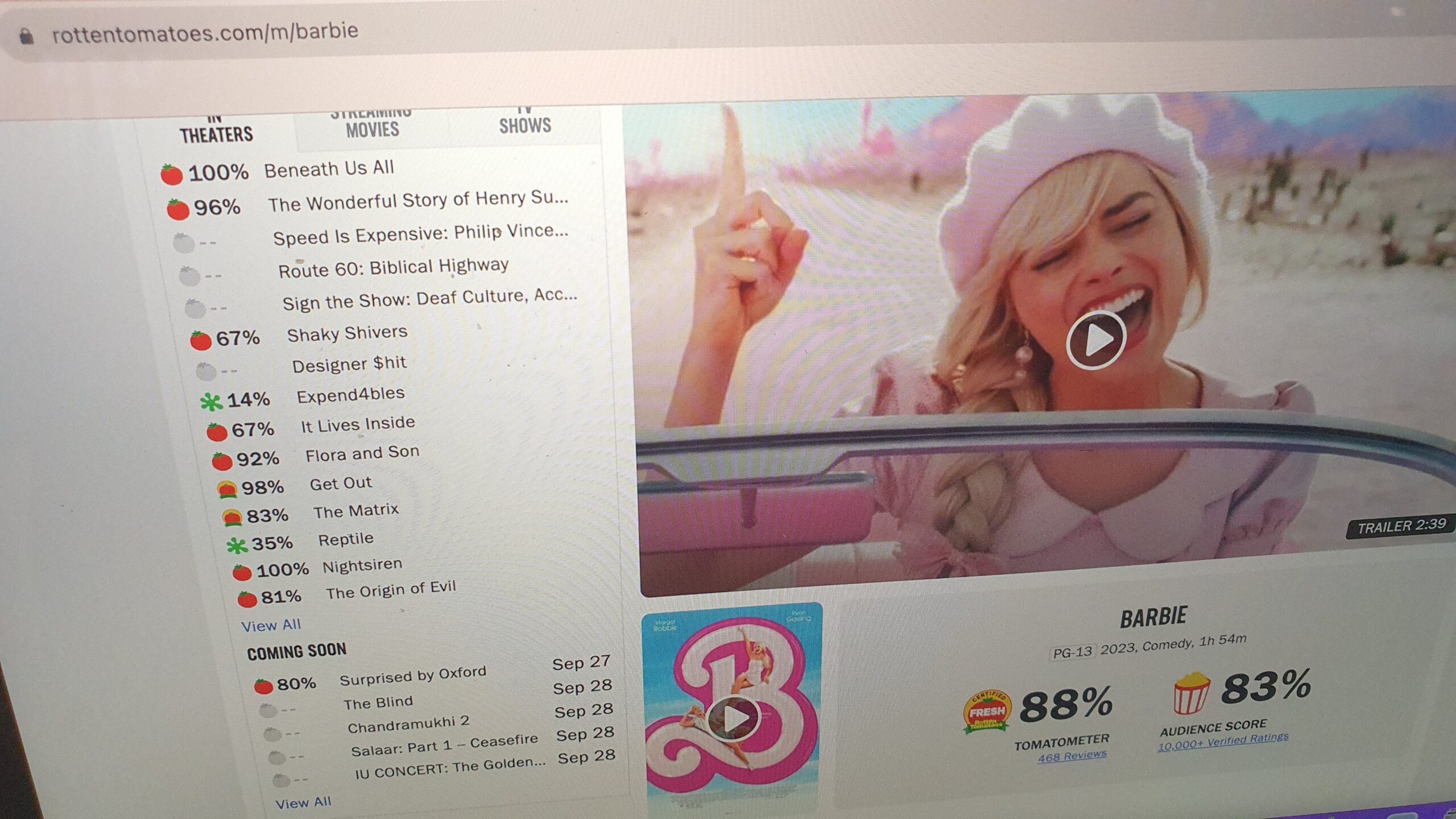

An example of this phenomenon allegedly emerged recently with the case of Greta Gerwig’s film Barbie, as unverified users reportedly branded the movie as “woke” and “anti-men,” subsequently triggering a deluge of unfavorable reviews on platforms like Rotten Tomatoes.

HBO’s adaptation of the video game The Last of Us faced a comparable fate earlier this year over its depiction of same-sex relationships between characters, while Disney’s live-action film The Little Mermaid became a target of review bombing after being criticized as “another Disney woke-a-thon.”

In games, Starfield was review bombed, with one reason being that the game is exclusive to Microsoft platforms, as the studio behind it had recently been acquired by Microsoft.

As review bombing persists across various domains, pivotal questions emerge: How do companies grapple with effectively handling review bombing, and what potential strategies exist for improved management of these activities?

The review bombing conundrum

Rajvardhan Oak, a PhD student at UC Davis whose research focuses on review fraud, explained that bot-generated fake reviews can be detected through several indicators, such as a large number of reviews coming from the same IP address or accounts that only post reviews without engaging in any other activity.
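As a rough illustration of the two indicators Oak describes, the sketch below flags reviews that share an IP address with many others or that come from accounts with no other activity. The `Review` fields, the `other_activity` counter, and the `max_per_ip` threshold are assumptions made for this example; they do not describe how any platform actually works.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Review:
    account: str
    ip: str
    other_activity: int  # hypothetical count of non-review actions by the account


def flag_bot_like(reviews, max_per_ip=3):
    """Return the indices of reviews that match either indicator."""
    per_ip = Counter(r.ip for r in reviews)
    flagged = []
    for i, r in enumerate(reviews):
        shared_ip = per_ip[r.ip] > max_per_ip   # many reviews from one IP address
        review_only = r.other_activity == 0     # account does nothing but review
        if shared_ip or review_only:
            flagged.append(i)
    return flagged
```

A flag here is only a prompt for further investigation; several genuine users can share one address, and a new user may simply not have done anything else yet.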

Recognizing clusters of overly similar reviews from similar accounts helps identify bot-generated reviews. The complexity arises, however, because review bombing does not mirror the automated fake reviews spawned by bots: it is often “a coordinated effort by humans,” according to Oak.
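One simple way to look for overly similar reviews is to compare the words they share. The sketch below uses Jaccard similarity over word sets, a common text-overlap measure chosen here for illustration; it is not the method used in Oak’s research, and the `threshold` value is an assumption. As Oak notes, a check like this targets bot-style duplication and would likely miss a campaign in which real people each write their own text.

```python
from itertools import combinations


def jaccard(a: str, b: str) -> float:
    """Share of distinct words that two texts have in common (0.0 to 1.0)."""
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    if not words_a and not words_b:
        return 1.0
    return len(words_a & words_b) / len(words_a | words_b)


def near_duplicate_pairs(texts, threshold=0.8):
    """Return index pairs (i, j) whose texts are at least `threshold` similar."""
    return [
        (i, j)
        for (i, a), (j, b) in combinations(enumerate(texts), 2)
        if jaccard(a, b) >= threshold
    ]
```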

“The signals that you would expect from bot-generated reviews – you are no longer going to see them,” he said.

Human-driven review bombing involves authentic individuals using legitimate accounts, complicating the detection of their occasional false reviews, because unlike fake accounts, these users engage in legitimate activities beyond review bombing, such as leaving genuine reviews on the platform.

“In our research, we collected a dataset of products that had fake reviews associated with them. We found that there are some differences among the content of the review, but they’re not sufficient to directly build a machine learning or data science algorithm,” Oak added.

Even if such an algorithm were built, Oak explained, it would suffer from what is known as “low precision.”

This essentially means that the algorithm can catch fake negative reviews, but it also wrongly flags many genuine reviews as fake, producing numerous “false positives.” This presents a challenging issue: in the effort to eliminate fake reviews, a platform risks also removing a substantial number of legitimate ones.
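Precision has a standard definition: of all the reviews a detector flags, it is the share that were actually fake. The sketch below computes it alongside recall, the share of fake reviews that were caught. The review IDs in the usage note are invented for illustration.

```python
def precision_recall(flagged, actually_fake):
    """Return (precision, recall) for a set of flagged review IDs."""
    flagged, actually_fake = set(flagged), set(actually_fake)
    true_positives = len(flagged & actually_fake)
    # Precision: of everything flagged, how much was really fake?
    precision = true_positives / len(flagged) if flagged else 0.0
    # Recall: of everything really fake, how much was flagged?
    recall = true_positives / len(actually_fake) if actually_fake else 0.0
    return precision, recall
```

If a detector flags ten reviews (IDs 0 to 9) and only three of them (0, 1, and 2) are fake, it catches every fake review but has a precision of just 3 in 10; the other seven flagged reviews are false positives that would be removed by mistake.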

Gang Wang, an associate professor of computer science at the University of Illinois at Urbana-Champaign, also noted that it is “much more difficult” to identify when the activity involves real human users, and that manual moderation may be necessary in such cases.

“Automated algorithms can assist in identifying areas for investigation. For instance, you can look for signals such as a sudden and significant change in review scores over a short period or an unusual spike in the number of reviews received per day or per hour,” Wang said.
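The kind of signal Wang mentions can be sketched as a simple spike check on daily review counts: each day is compared with the average of the preceding days. The window length, the multiplier `k`, and the floor on the standard deviation are assumptions picked for this example, and the sample numbers in the usage note are invented. The same idea could be applied to average scores instead of counts.

```python
from statistics import mean, pstdev


def spike_days(daily_counts, window=7, k=3.0):
    """Return indices of days whose count far exceeds the recent average."""
    flagged = []
    for day in range(window, len(daily_counts)):
        history = daily_counts[day - window:day]
        mu, sigma = mean(history), pstdev(history)
        # Floor sigma at 1 so a very flat history does not flag tiny changes.
        threshold = mu + k * max(sigma, 1.0)
        if daily_counts[day] > threshold:
            flagged.append(day)
    return flagged
```

With daily counts of `[10, 12, 9, 11, 10, 13, 11, 12, 95, 10]`, only the day with 95 reviews (index 8) is flagged. In line with Wang’s point, such a flag identifies where to look, not what to delete.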

The final decisions, however, would require human judgment, unless there are clear signs that bots or automated scripts are involved in the activity.

“Often, it has to be reviewed by a group of moderators manually to determine the nature of the review bombing and make decisions based on their platform, terms of service, or policies,” Wang added.

Ongoing battles

False reviews can significantly impact both the entities or individuals being reviewed and potential customers. For those being reviewed, which could include authors, movies, products, or services, fake negative reviews can damage their reputation, resulting in financial losses and wasted resources.

For potential customers, false reviews can mislead decision-making, causing them to make choices based on inaccurate information and eroding their trust in review platforms.

Yet, fostering a positive platform environment is also in the company’s best commercial interests, noted Yvette Wohn, associate professor at New Jersey Institute of Technology.

“Corporations should have a moral obligation, but even if we put morality aside, if an environment is so toxic that people become upset, they will no longer use it,” Wohn said. “We see that a lot in communities where if it goes too far, people will abandon the community. So it is in the company’s best interests, especially if they want to grow to create a good environment.”

Major platforms have implemented diverse strategies to combat review bombing, though the efficacy of these measures remains unproven.

Last November, Google announced the implementation of a new system on the Google Play Store aimed at proactively preventing inaccurate, coordinated user attacks on app ratings. It stated that its “strengthened system now better detects anomalies and unexplained spikes in low star ratings,” enabling its team to promptly investigate and respond to suspicious activity.

Nonetheless, when asked by Rappler, Google did not specify what changes it had made to enhance its detection capabilities or provide information on the new system’s effectiveness compared to the old one.

Goodreads, meanwhile, claimed that it “takes the responsibility of maintaining the authenticity and integrity of ratings” in its statement, despite failing to address the recent review bombing against writers which ultimately led to Eat, Pray, Love’s Elizabeth Gilbert withdrawing her upcoming novel The Snow Forest from publication.

In an email to Rappler, Goodreads stated that the platform has “increased the number of ways members can flag content to us, improving the speed of responsiveness” as a part of its ongoing efforts to enhance the platform’s ability to detect and manage content and accounts that violate its review and community guidelines.

Fandom, the parent company of Metacritic, noted that reviews on its sites are removed if they are found to violate its terms of use.

“We are currently evolving our processes and tools to introduce stricter moderation in the coming months,” Fandom told Rappler in an email, though precise measures were not detailed in the company’s response.

Rotten Tomatoes did not respond to Rappler’s request to comment on its efforts to address review bombing.

Meanwhile, James Birt, associate professor of creative media studies at Bond University, underscored the importance of expanding these initiatives, emphasizing that society should take a more comprehensive approach.

“While it’s crucial for review platforms to play a role in this education, it should also extend beyond their scope,” he said. “Integrating discussions on cyber safety, ethical online behavior, and responsible digital citizenship into educational curricula within schools can be immensely impactful.”

Deploying countermeasures

As review bombing persists as a significant concern across various industries and digital platforms, the search for effective solutions has become urgent. That urgency is exemplified by the recent emergence of new anti-trolling laws, driven by the Federal Trade Commission.

However, as previously mentioned, combating human-generated false reviews presents unique challenges, setting it apart from the task of tackling bot-generated fake reviews.

Oak noted that machine learning and AI could be employed in prevention measures, allowing companies to develop a potential risk metric that calculates the likelihood of a review bombing incident.

For instance, for novels, platforms could build a dataset from information such as a book’s topic, the countries it addresses, and the gender of the author, drawing on historical examples of review bombing.
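To make the idea of a risk metric concrete, here is a deliberately naive sketch. It estimates, for each attribute value seen in past cases, how often titles with that value were review bombed, then averages those rates for a new title. This is not Oak’s method: the feature names, the smoothing, and the averaging are all assumptions for illustration, and a real system would need far more data and a properly validated model.

```python
from collections import defaultdict


def fit_rates(history):
    """history: list of (features_dict, was_bombed) pairs from past titles.

    Returns a smoothed bombing rate for every (feature, value) pair seen.
    """
    counts = defaultdict(lambda: [0, 0])  # (feature, value) -> [bombed, total]
    for features, was_bombed in history:
        for item in features.items():
            counts[item][0] += int(was_bombed)
            counts[item][1] += 1
    # Laplace smoothing keeps rare values away from an extreme 0 or 1.
    return {item: (b + 1) / (n + 2) for item, (b, n) in counts.items()}


def risk_score(features, rates, default=0.5):
    """Average the historical rates of a new title's attribute values."""
    values = [rates.get(item, default) for item in features.items()]
    return sum(values) / len(values) if values else default
```

A platform could use a score like this to decide which upcoming titles deserve closer monitoring, rather than to block reviews outright.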

“One significant obstacle revolves around the development of algorithms that can accurately differentiate between legitimate review concerns and orchestrated review bombing campaigns,” Birt said, further emphasizing the importance of data-based analysis in strengthening the anti-review bombing algorithms.

“To overcome this, analyzing extensive datasets to discern patterns and understand the quantitative and qualitative aspects of reviews becomes crucial.”

Employing verified accounts and authentication measures can help; however, experts acknowledged that these may not entirely prevent genuine individuals from engaging in review bombing.

“We are dealing with real people who are upset for a reason, and they’re submitting under their own name…if you have two-factor authentication, or some authentication, it helps to protect you against anonymous reviews so people can’t write scripts or robot to start posting reviews,” Engin Kirda, professor of computer science at Northeastern University, said.

“But you can’t really prevent crowdsourcing or crowd actions which is what typically happens [in review bombing]. So it helps, but I wouldn’t say it’s the silver bullet. There are limitations,” Kirda added. – Rappler.com
