MANILA, Philippines – A troubling trend from the days of social media persists in the age of artificial intelligence: the lack of transparency from tech companies as to how their systems work.

Stanford University’s Center for Research on Foundation Models (CRFM) found that the flagship AI models of 10 major firms lacked transparency in key categories such as what data their systems were trained on, how much they paid laborers to work on that data, the models’ capabilities, their risks, and their overall impact on people.

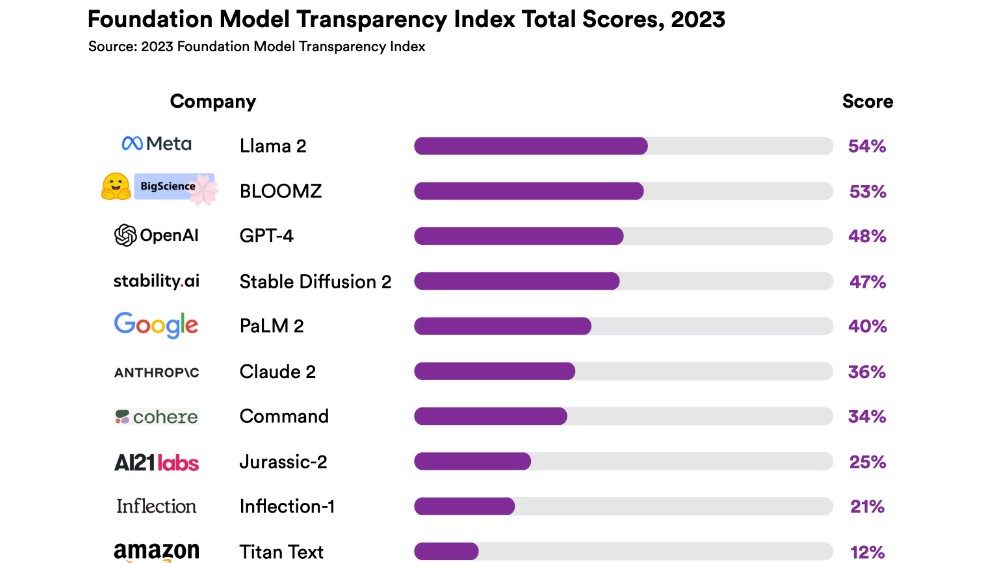

Included in the center’s Foundation Model Transparency Index are Google’s PaLM 2, Stability AI’s Stable Diffusion 2, OpenAI’s GPT-4, and Meta’s Llama 2. Llama 2 is the top-scoring model in the index, but even then it netted only 54 out of a possible 100 points. Amazon’s Titan Text scored the lowest, with 12 out of 100 points.

The CRFM said the developers are “least transparent with respect to the resources required to build foundation models” including data, labor, and the amount of compute used for training.

Meanwhile, the companies are most willing to disclose how they protect the data of people using their platforms, along with some basic details about their models, including how the tech was developed, its capabilities, and its limitations.

“No major foundation model developer is close to providing adequate transparency, revealing a fundamental lack of transparency in the AI industry,” the center said.

The index graded the firms on 100 indicators including data sources, harmful data filtration, personal information in data, amount of computing power used, copyright mitigations, user interactions with the AI system, risks demonstration, and review of trustworthiness.

Why transparency matters

The index is meant to show how willing the companies are to disclose vital information that helps not only researchers, experts, and regulators, but also end users.

For example, in the area of corporate responsibility, a company still gets a point in the index if it properly discloses the relevant details, even if it uses a lot of energy, doesn’t pay its workers properly, or has end users who are doing something harmful.

While the end goal is for these companies to be responsible, transparency is the first step, the center’s society lead Rishi Bommasani said.

The lack of transparency from tech platforms is what has led to “deceptive ads and pricing across the internet, unclear wage practices in ride-sharing, dark patterns tricking users into unknowing purchases, and myriad transparency issues around content moderation that have led to a vast ecosystem of mis- and disinformation on social media,” a press release from the CRFM states.

At Rappler’s Social Good Summit in September, Nobel Peace Prize laureate Maria Ressa and Facebook whistleblower Frances Haugen also called for transparency, and for the government to mandate it.

Ressa explained, “There are many things that are structurally embedded into the technology companies that should be illegal, but we don’t know because we don’t have access to the data. That’s what the governments have to do.”

Haugen, who exposed that Facebook knew Instagram caused real harm to young girls, said that if transparency were mandated, tech firms would not be able to hide the costs of being bad, and the impact on the companies would not be just reputational.

“The only information [publicly traded] Big Tech companies are required to publish is on the profit and loss numbers…. But once you have data out, you can start doing things,” Haugen said.

Leaders in Britain, the United States, the European Union, and other G7 countries have shown they’re prioritizing the regulation of AI. But the CRFM’s index exposes a huge gap. How does one regulate something that one knows so little about?

Kevin Klyman, a Stanford MA student and lead coauthor of the study, said, “In our view, companies should begin sharing these types of critical information about their technologies with the public.”

The index can be accessed here. – Rappler.com
