SUMMARY

This is AI generated summarization, which may have errors. For context, always refer to the full article.

MANILA, Philippines – Meta announced on Tuesday, August 22, it had come out with “the first all-in-one multilingual multimodal AI (artificial intelligence) translation and transcription model.”

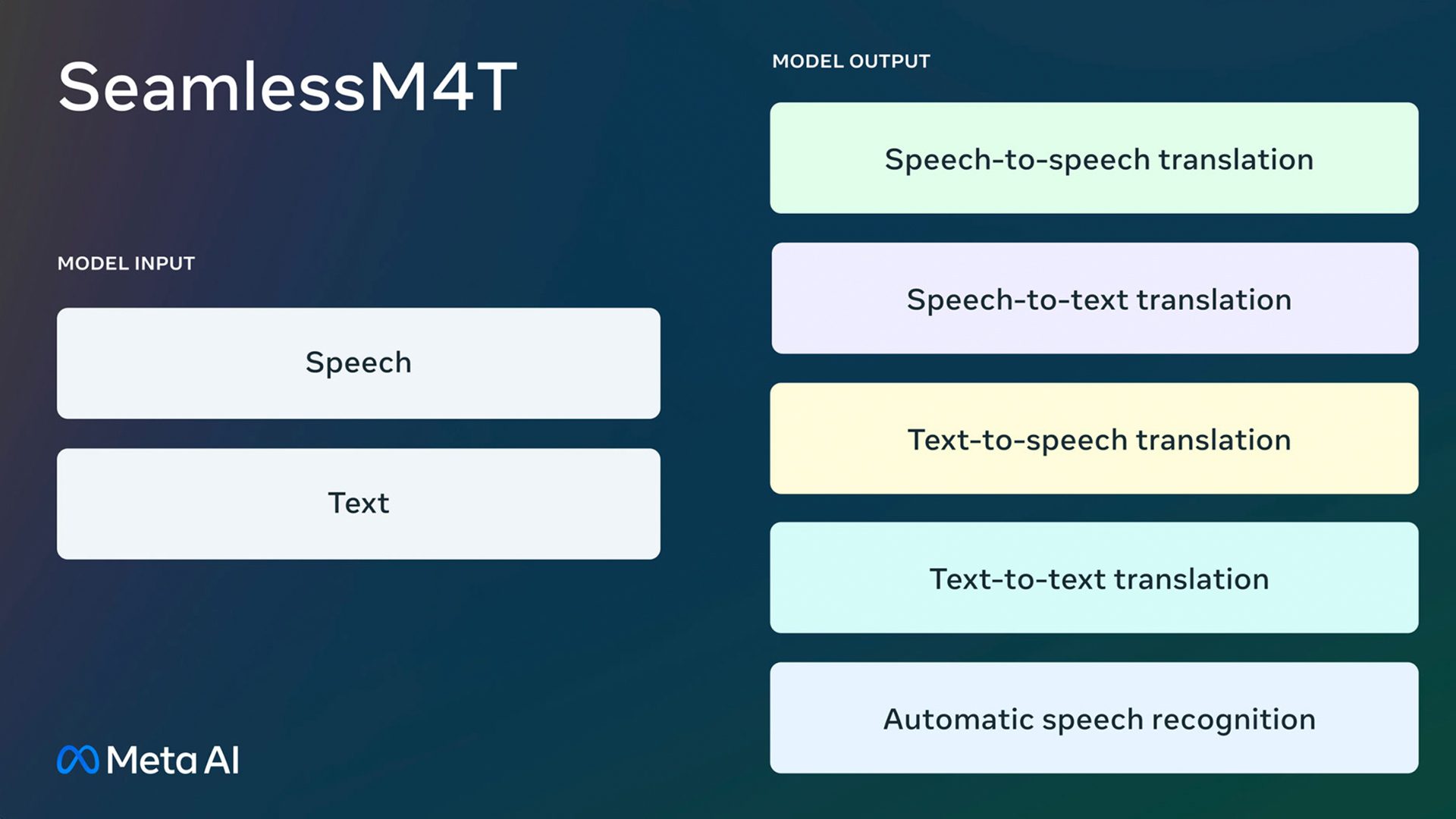

According to a statement from Meta, this model, called SeamlessM4T, “can perform speech-to-text, speech-to-speech, text-to-speech, and text-to-text translations for up to 100 languages depending on the task.”

SeamlessM4T can recognize 100 languages, and does speech-to-text translation and text-to-text outputs for 100 languages. It also does speech-to-speech translation, where you input spoken words from 100 possible languages, and it’ll output spoken translations back from 36 possible languages. Lastly, it will also do text-to-speech translations, supporting “nearly 100 input languages and 35 (plus English) output languages.”

Meta is publicly releasing SeamlessM4T under a research license so researchers and developers can build on what’s been made so far. Alongside this, Meta is also releasing the metadata of SeamlessAlign, which it calls “the biggest open multimodal translation dataset to date, totaling 270,000 hours of mined speech and text alignments.”

Ars Technica, in its report, notes a wrinkle in the research paper explaining how SeamlessM4T works, as it was vague regarding where the data came from to train the artificial intelligence.

The SeamlessM4T research paper said Meta’s researchers “created a multimodal corpus of automatically aligned speech translations of more than 470,000 hours” which is the SeamlessAlign dataset they have. Meta said it then “filtered a subset of this corpus with human-labeled and pseudo-labeled data, totaling 406,000 hours.”

While Meta says text data came from “the same dataset deployed in NLLB” – which Ars Technica explained was sentence sets that were pulled from Wikipedia, news sources, scripted speeches, and additional sources, then translated by professional human translators – the SeamlessM4T speech data came from “4 million hours of raw audio originating from a publicly available repository of crawled web data.” The paper said a million hours of this was in English.

Meta, however, did not specify where the audio clips used came from.

More information on Meta’s SeamlessM4T project is available through a blog post on its AI arm. Meanwhile, the actual trained neural network files, Ars Technica noted, is available through Hugging Face. – Rappler.com

Add a comment

How does this make you feel?

There are no comments yet. Add your comment to start the conversation.