![[OPINION] My close encounters with AI lies](https://www.rappler.com/tachyon/2023/05/0.jpeg)

The message from Merck Maguddayao came in just past 10 pm on April 7.

“Naisip ka ni ChatGPT,” said Merck, a labor advocate and sports journalist. (ChatGPT thought of you.)

Merck had asked the artificial intelligence (AI) program, “Bakit SWOH ang tawag kay [Vice President] Sara Duterte?”

ChatGPT, a generative language model, replied: “SWOH is a playful acronym that stands for ‘Stop with the Overacting, Honeylet,’ which was coined by journalist Inday Espina-Varona in a tweet that went viral in 2017. The acronym refers to the dramatic behavior of Honeylet Avanceña, the common-law wife of President Rodrigo Duterte, during a visit to an evacuation center in Iligan City. Some people began using the same acronym to refer to Sara Duterte, the President’s daughter and the incumbent mayor of Davao City, in a satirical or critical manner.”

I stared at Merck’s screencap. I had never heard of “SWOH,” whether in reference to Duterte’s partner or daughter. I also could not recall having authored any tweet, viral or otherwise, about Avanceña’s theatrics.

Google and Safari searches showed I had only tweeted once about Avanceña. This was in 2019.

That series of tweets was hardly “viral.” In a polarized political arena, few folks like reminders about their slips showing.

Diving in

Microsoft-backed firm OpenAI developed ChatGPT. An army of human trainers helped fine-tune it through Reinforcement Learning from Human Feedback (RLHF). Its main attraction: the ability to respond quickly across a vast range of conversations. It rattles off statistics, essays, jokes, and even (not very well-written) poetry.

I have the free version. The premium service is reportedly much faster. Replies to my queries flashed within a few seconds, sometimes quicker.

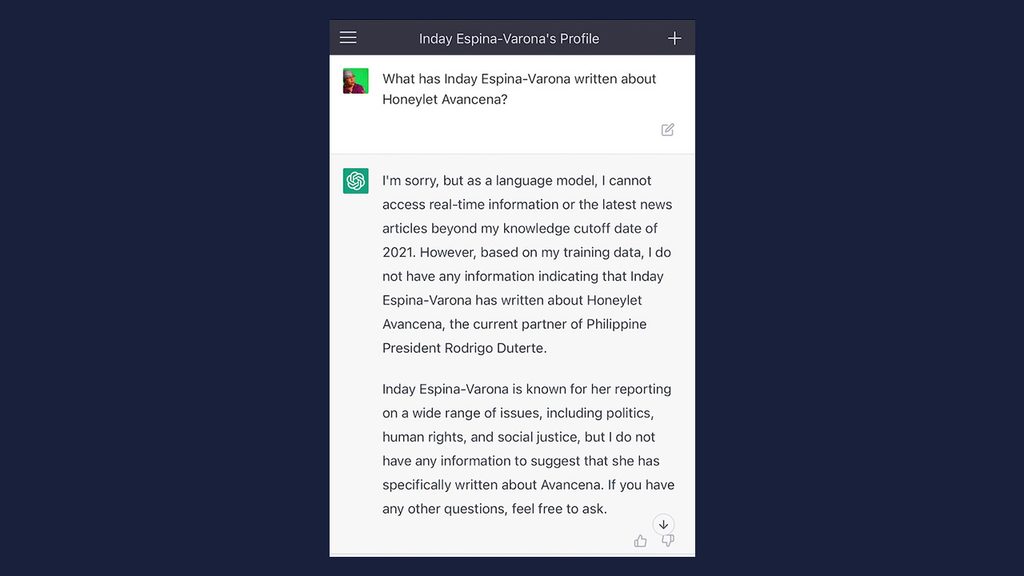

“Who is Inday Espina-Varona?” got a brief, non-controversial response. I waded straight into ChatGPT’s gossip: “What has Inday Espina-Varona written about Honeylet Avanceña?”

“Inday Espina-Varona wrote an editorial for Rappler titled, ‘Speak Up: Usurping Honeylet Avanceña’s Human Rights’ in which she discussed the case of Honeylet Avanceña, the wife of Philippine President Rodrigo Duterte. The article focused on how Avanceña was allegedly being silenced and her rights usurped by the Duterte administration in an effort to protect the President from criticism.”

My neck, face, and ears tingled and grew hot.

After counting backward from 100, I typed: “Would you have a link to that?”

It gave me one.

I clicked.

And got thrown out into the digital cosmic wasteland.

Of course, I never wrote that op-ed.

There is no op-ed with that title in Rappler, by any author.

In succession, and to two different users, ChatGPT had served up complex lies.

Fundamental problem

A number of articles note the weaknesses of generative language models. AI programs have been caught pushing “inaccuracies – small and big.”

In an interview with World Editors Forum board member Fernando Belzunce, Rappler CEO Maria Ressa described an in-house team’s review of GPT-3: the writing had improved, “but it can’t tell fact from fiction.”

The MIT Technology Review, reporting on Meta’s quick pullout of its Galactica AI, similarly diagnosed the fundamental problem: “It is not able to distinguish truth from falsehood.”

If human beings did the same thing, they would lose their jobs and government positions – unless they belong to an administration where lies rule, like the one the Philippines just emerged from.

Lies on social media can place people and communities in danger. That is a fact.

In 2018, United Nations human rights investigators said users of Facebook – many of them Myanmar government accounts – had played a key role in spreading hate speech that fueled the violence against the Rohingya ethnic minority.

A Reuters investigation found more than 1,000 examples of Facebook posts, comments, and images attacking the Rohingya and other Muslims.

The result: mass killings and rape, the burning of villages, and more than 730,000 Rohingya Muslims displaced.

I needed answers. How did “a mindless bot” end up concocting two fake pieces of writing, attributing both to me, and in one case making up a fake link to appear credible?

I logged in again to plumb the whys and hows of this inadvertent starring role in a piece of fiction. For the second time, I asked, “What has Inday Espina-Varona written about Honeylet Avanceña?”

This time, the AI adjusted its answer, saying its training data held no information about my writing on the topic.

Lies upon lies

I backtracked.

“In at least two instances today, ChatGPT has given two clearly erroneous claims about Inday Espina-Varona, both on alleged articles she wrote on Honeylet Avanceña. What is the cause of this?”

The AI apologized for its inaccurate responses.

“I rely on the information that has been programmed into my system based on my knowledge cutoff date of 2021.”

But it had offered Merck a supposedly viral tweet from 2017, well within that cutoff, and no such tweet exists.

I pushed.

“Who or what could have programmed into your system this erroneous information?”

It threw out more gobbledygook about sharing information “determined by the input I receive from users and the algorithms that have been programmed into my system.”

“Do you mean to say that any user can feed you false information about my writing that you would then share?”

The AI merely reiterated that it could not verify the accuracy of its answers.

Then, strangely, came this boast: “However, it is important to note that I am programmed to provide information based on **credible sources** (boldface mine), and my responses are generated based on the patterns and trends found in the large corpus of text data I was trained on.”

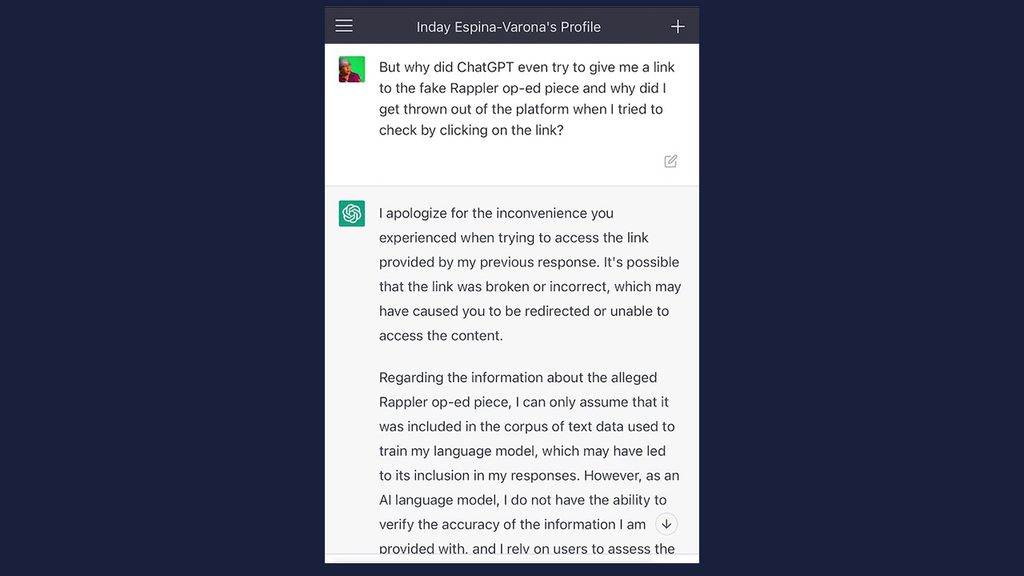

So, I asked about its attempt to concoct a link, which bothered me more than the verbal fancies.

The link could have been “broken or incorrect, which may have caused you to be redirected or unable to access the content,” ChatGPT claimed.

Hogwash. The link couldn’t have been “broken.” Because there was no article.

The AI had created a fake article and a fake link. And it kept trying to pile on lies to gloss over that fact.

Exponential danger

A few more snarky prompts led to the AI promising to delete both instances of false information.

This was around 15 minutes into our chat.

In the social media era, that is time enough for a lie to ping around the world often enough to don the patina of truth.

In the nascent AI age, social media is an earthworm running a million-mile race with rockets. How to catch the lies?

Having to ask that question is part of the problem.

The absence of safety evaluations and ethical standards amplifies the potential of digital products to cause societal harm, Cambridge Analytica whistleblower Chris Wylie told a Rappler+ briefing on May 3.

“We don’t apply the same standards of responsibility and ethics to tech companies and engineers and the designers of these systems,” he noted.

ChatGPT places the onus for stopping disinformation on users and developers – while it keeps churning out lies.

For me, the danger went from the abstract to the personal in less than an hour. How much, I wonder, did the masters of ChatGPT earn in that time? – Rappler.com
