On Tuesday, February 6, 2024, concerned individuals alerted Rappler to a deepfake video that made it appear that Nobel Peace Prize laureate and Rappler CEO Maria Ressa said she had been earning from the cryptocurrency Bitcoin.

The deepfake video manipulated footage from a November 2022 interview of Ressa by American talk show host Stephen Colbert on his show.

It was circulated through a newly created Facebook page and an ad on Microsoft’s Bing platform. Facebook has since taken down the post, and Microsoft the ad, that circulated the deepfake.

In a follow-up investigation, Rappler and Swedish digital forensic group Qurium used digital fingerprints left by the fakers to link the deepfake to a scam network that appears to be of Russian origin. The investigation also indicated that the campaign specifically targeted Philippine audiences.

Spotting the deepfake

The deepfake video was first spotted on January 25, 2024, on the Facebook page “Method Business,” which had been created just a few days earlier.

Twenty-one hours after the video was posted, it had already garnered 22,000 views. (See screenshot below.)

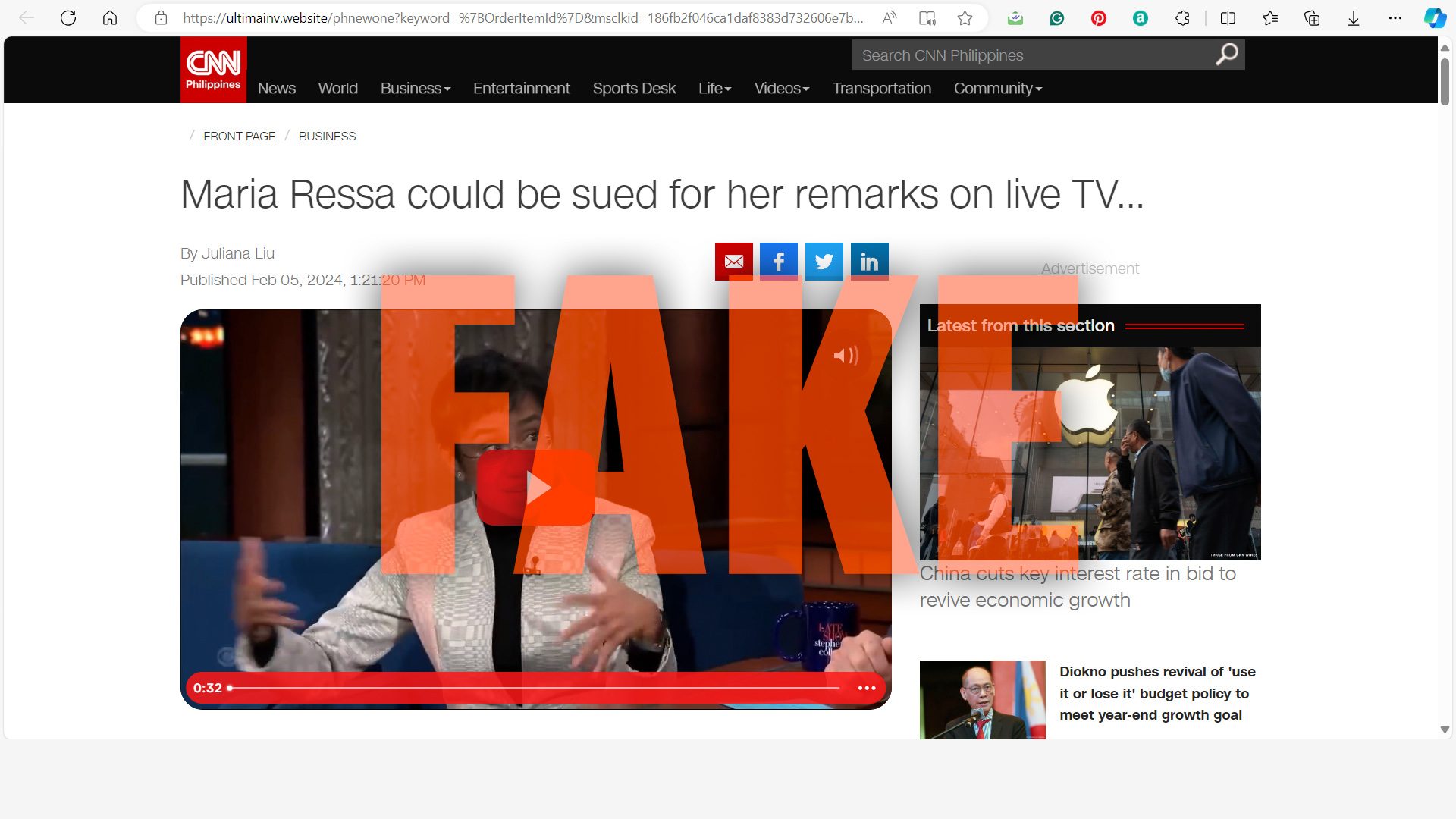

Rappler was later also alerted to a webpage hosted at ultimainv.website, which alternately displayed cloned story pages from the websites of Rappler and CNN Philippines. The impostor site was promoted through an ad served on Microsoft’s Bing platform. (The ad is encircled by Rappler in the screenshot below.)

Ultimainv.website is a newly registered domain. The first record in its domain history is dated January 10, 2024.

The creators of the deepfake video manipulated the November 2022 interview in which Colbert asked Ressa about her book, How to Stand Up to a Dictator, and the cases filed against her and Rappler at the instigation of former Philippine president Rodrigo Duterte.

The manipulated video used an AI-generated voice that mimicked Ressa’s. For most of the video, her lip movements matched the audio, but at several points the syncing failed, the strongest indication that the video was a deepfake. Watch the video embedded below, which compares the original interview with the manipulated version that used the AI-generated audio.

The video may look convincing to users who aren’t paying attention or who don’t know how AI can clone voices or what a deepfake video might look like. Rappler reported the video to Meta, which has since removed it, but the Method Business page remains active.

Beyond trying to scam people into investing in Bitcoin, the fake articles published on webpages mimicking Rappler and CNN Philippines also implied that Ressa was involved in the scam.

Under the same headline, “Maria Ressa could be sued for her remarks on live TV,” the fake CNN Philippines and Rappler articles claimed that Ressa’s career “hangs in balance” following remarks she supposedly made on live TV. The ad that promoted the impostor websites led with the text “The end for her?”, implying that Ressa was embroiled in a scandal.

The fake articles also claimed that the live broadcast had been interrupted after leading banks demanded that it be stopped and the recording erased. (See screenshots of the fake articles below.)

Russian origin, targeting a Philippine audience

The pages where the video and the fake Rappler and CNN Philippines articles were posted were also engineered to be viewable only through Philippine internet service providers (ISPs). Investigators who looked into the deepfake had to use various techniques to view the impostor sites from their locations. This indicates that those behind the deepfake were specifically targeting Filipinos.

CNN Philippines’ website had already shut down on February 1, 2024, days before the February 5, 2024 publication date of the fake article, a clear sign that the article was fabricated.

A follow-up investigation with Swedish digital forensic group Qurium Media later found that the clone sites were part of a fraudulent online network that tricks victims into paying for products that, on delivery, usually turn out to be an empty box or a random low-quality item.

The network, according to Qurium, appears to be of Russian origin.

Links found in the network also contained Cyrillic script and timestamps in the GMT+3 time zone (Moscow, St. Petersburg). Qurium said this is a “solid indication” but “not conclusive proof” that the network is of Russian origin.

The content on the platform used to set up the fake websites is in Russian.

Qurium analyzed the domain registration and hosting data of the fake sites, the metadata of the images and embedded video on those sites, and the metadata of the original articles that had been scraped from the CNN Philippines and Rappler websites to create the fake pages.

The campaign ran from November 28, 2023, to February 25, 2024.

Qurium’s analysis of the network shows an elaborate scheme that skirts legal accountability by dividing the operation into separate entities, allowing for deniability and anonymity.

According to Qurium, the network splits the scam into several roles so that no single party can be held accountable. These are the roles:

- The affiliate advertiser or the publisher

This is the webpage that collects the names and phone numbers of potential victims and passes them to an intermediary, which in this case is “M1 Shop.” Qurium said that on their site, “they make clear that they just advertise goods and they are not responsible for anything related to the merchandise.”

The affiliate advertiser in this case was identified as “TD Globus Contract.” Aside from ultimainv.website, which hosted the fake CNN Philippines and Rappler pages, TD Globus Contract was found to be linked to more than 40 other websites tied to the scam network.

- The intermediary

The intermediary (M1 Shop) takes the victims’ information from the publisher and forwards it to the advertiser for a fee. It also pays the publisher for the information it receives, or for the clicks on the publisher’s webpage that send victims to M1 Shop.

- Advertisers

Advertisers create the fraudulent offers, which in this network have ranged from nutrition products to cryptocurrency offers.

Qurium explained that the fraud works because the intermediary shields the advertisers’ identities, while publishers, or their webpages, are swapped out “once their reputation has been compromised.”

“Ultimately none takes responsibility for the fraud,” Qurium said. “The websites that promote the products are registered under fake companies, and claim that they do not know the final product vendors, and the advertisement network claims that they do not monitor what is promoted in their platform. Something is guaranteed though, victims get scammed and everyone in their network gets paid for their ‘services.’”

M1, meanwhile, plays a “brokering role” in the scam network, shielding the malicious advertisers from scrutiny.

Rappler has reached out to M1 Shop via the Telegram accounts listed on its website. We will update this story when we receive a response from M1.

Potential harm

Deepfake videos can be convincing and powerfully persuasive, which is why scammers attempt to use them.

Scams are not the only potential motivation, however. In India, politicians have used deepfakes to malign opponents or confuse the electorate. In Taiwan, deepfakes and cheap fakes were spread online ahead of the 2024 presidential elections. In Indonesia, deepfakes were also used in the lead-up to the country’s own presidential elections.

Disinformation does not always rely on an outright lie. Often, its aim is merely to plant a seed in the minds of audiences that can later be exploited, Qurium added in its report.

Whatever the motivation, deepfakes can sway public opinion like a very powerful lie, which is especially harmful during national elections and in fast-moving situations such as outbreaks of violence.

In this specific case, if the intent was to scam people, the scammers may have thought that using a Maria Ressa deepfake could convince some to invest in a product that ultimately doesn’t exist. The fake campaign may have attempted to leverage a journalist’s reputation as an authoritative voice, at her expense.

Other authority figures can be victimized too, putting their reputations at risk. While Rappler and Qurium were investigating this deepfake, a similar one involving a Filipino businessman was circulated through what appears to be the same process. Very recently, deepfakes featuring GMA7 Network anchors were used to promote a necklace supposedly from the Vatican.

There may also have been other motives. What is curious about the Maria Ressa deepfake is that the Bing ads promoting the impostor CNN Philippines and Rappler sites did not appear to be promoting a product. Instead, they implied a scandal involving Ressa.

“What’s particularly concerning (about the Ressa deepfake) are the layers of malicious intent behind a single act of digital manipulation and the consequent harms these inflict,” De La Salle University Communications professor Cheryll Soriano told Rappler.

Soriano, who has been doing research on disinformation on platforms like YouTube, was one of those who spotted and alerted Rappler about the deepfake video. She said: “First, it (the deepfake video) attempts to push a scam. Second, it makes the scam appear news-like, pretending as Rappler and CNN Philippines, undermining the credibility of news organizations. Third, it is clearly motivated to discredit Maria Ressa by maligning her reputation.”

Soriano added that the deepfake also “perpetuates a disturbing trend of misogynistic practices, utilizing deep fakes to humiliate women. Even if the scam itself fails, it still exposes and perpetuates the latter three, spreading them across networked publics.”

Addressing the deepfake threat

There is a general consensus among fact-check groups and disinformation experts that the deepfake problem will only grow now that generative AI technologies are readily available to ordinary citizens.

This makes nuanced and swift action by platforms critical to mitigating the impact of deepfakes.

In various public statements, Facebook and Microsoft, the platforms used to circulate the Maria Ressa Bitcoin deepfake, have both said they have programs to address deepfakes.

In a recently released statement, Meta, the owner of Facebook, said it is working with industry partners on common technical standards for identifying AI content, including video and audio. It said it will label images that users post when it detects these industry-standard indicators. It also said it has been labeling photorealistic images created using Meta AI since the product was launched.

In an email interview with Rappler, a Microsoft spokesperson said the company has closed 520,000 accounts engaged in fraudulent and misleading activity since April 2023, using both automated and manual methods. The company said it builds on its knowledge base with each incident to help it detect similar ones in the future, and that it has instituted Information Integrity and Misleading Content policies.

The Microsoft spokesperson also said the company is investing in technology to detect deepfakes: “AI is also being harnessed to identify deepfakes. Major players like Microsoft are investing heavily in developing technologies to detect these sophisticated forgeries.”

Microsoft is also part of a group of tech companies and news organizations that have been working with Adobe on the Content Authenticity Initiative, an effort that promotes the adoption of an open industry standard for content authenticity and provenance.

Challenges to detecting AI-generated fakes

There are, however, challenges to automated detection of AI-generated fakes.

The Microsoft spokesperson said that bad actors employ sophisticated techniques to elude detection.

“AI technology is advancing at an unprecedented pace, leading to the daily emergence of new platforms worldwide that enable the creation of deepfakes. Ideally, companies producing such content would implement watermarking or similar technologies to facilitate easy detection. However, there are challenges: technology capable of removing watermarks already exists,” the spokesperson said.

Most technologies for creating deepfakes are also open source, meaning their code is made freely available and may be redistributed and modified by anybody. Microsoft says this allows anyone to modify or delete watermarking code, “complicating detection efforts.”

The practice of making the source code of large language models (LLMs) available for anyone to examine, copy, and modify has played a critical role in accelerating the development of generative AI technologies. In fact, even big tech companies have released versions of their own LLMs to the public.

In July 2023, Meta released Llama 2, an openly available version of its Llama artificial intelligence model. More recently, Google released Gemma, a family of open models built from the research and technology behind Gemini, its proprietary AI model.

While open-sourcing language models may make them more transparent and auditable, some experts note that the biggest threat posed by unsecured AI systems lies in their ease of misuse, making these systems “particularly dangerous in the hands of sophisticated threat actors.”

Preventing AI misuse, holding fakers accountable

Since even the source code of AI models is now available for anyone to modify freely, the ability to trace a particular deepfake back to its source becomes very important.

As illustrated above, the people behind fakes usually leave traces of who they are when they use digital systems. However, much of the data that could identify those accountable still resides with the platforms. “The greatest challenge of disinformation is not the lies, but in the lack of accountability of those that disseminate the fake information,” Qurium said.

The Swedish group also criticized Meta and other platforms that profit from disinformation and allow it to thrive.

The Microsoft spokesperson acknowledged that the use of deepfakes is “a new and emerging threat,” and that platforms need to do more. “We need to do more at Microsoft, and there are roles for all of industry, government, and others.”

The company said that, aside from working with law enforcement and taking other legal and technical steps, it has also recently “helped move forward an industry initiative to combat the use of deepfakes to deceive voters around the world, and we’ve advocated for legislative frameworks to address AI’s misuse.”

A Meta spokesperson told Rappler via email, “Meta’s Community Standards apply to all content and ads on Facebook, regardless of whether it is created by AI or a person, and we will continue to take action against content that violates our policies. We have removed the reported video for violating our Fraud and Deception policies.”

Whether these measures will be enough to stem the avalanche of deepfakes that those fighting disinformation expect to face in the coming months remains to be seen.

Days after Microsoft took down the ad promoting ultimainv.website, a new ad appeared on the platform. It linked to a site with the same content as the earlier Rappler impostor site, but on another newly created domain.

The game of whack-a-mole continues. – Rappler.com

Help us spot suspected deepfakes by emailing dubious content you find on social media to factcheck@rappler.com

This special report was produced with support from Internews
