
![[ANALYSIS] Part 1: Survey says or not](https://www.rappler.com/tachyon/2021/10/opinion-survey-says-or-not-sq.jpeg)

Part 1 of 3

This is the first of a three-part series based on the 20th Jaime V. Ongpin Memorial Lecture on Public Service in Business and Government, delivered on October 27, 2021.

As of October 8, 2021, a total of 302 persons had filed candidacies for just three contests: president, vice-president, and 12 Senate seats. Although some have since backed out, and there is speculation about last-minute substitutions by mid-November, the large pool of candidates makes one wonder what attracts people to run for elective office, especially given the myriad problems we will face next year. The late comedian Dolphy was once asked whether he was running for office, and reportedly responded, “Madaling tumakbo, eh, paano kung manalo?” (“Running is easy, but what if I win?”)

Even before the 2022 election fever started, polls were conducted through Facebook, SMS, and media websites to get a sense of our voting preferences. New pollsters have also conducted face-to-face surveys, some as early as the beginning of the year. But should we trust the results of all these surveys?

The week before candidacies were filed, Pulse Asia released the results of a survey on our voting preferences, and pundits began interpreting them under different assumptions about whether presidential daughter Sara Duterte, and whether Vice President Leni Robredo, would or would not run. New survey outfits are also entering the scene, some relying more heavily on digital data capture.

Lian Buan of Rappler asked my views about Kalye Survey, reportedly a real-time, “real-man” survey in which more than 2,000 people on the street were randomly selected and interviewed on sidewalks and in markets in various areas of Metro Manila and neighboring Calabarzon. I pointed out that going out on the street and randomly picking people will not yield a national reading, or even a reliable reading of these areas, since not every voter in a city passes through those streets; and what exactly do they mean by random selection? Media should not give credence to polls that are not designed to yield representative results.

And speaking of representativeness, supporters of some presidential aspirants have been ecstatic that their candidate seems to be doing well on Google Trends, but that depends on which search term you use. Unfortunately, big data sources like Google Trends are also unreliable for tracking national views, since not everyone is online, and not everyone who is online knows how to use Google. Even if Google Trends data were a good representation of netizens, which I sincerely doubt, they would at best reflect the upper 10% of the socio-economic strata. Further, such data are likely to be inflated by trolls, who are employed across the political spectrum, though perhaps by some more than others, depending on the size of their campaign war chests.

We often treat opinion poll data as gospel truth until we notice discrepancies in survey findings. One of my FB friends posed several questions in a post (Figure 1): (a) Should we trust surveys, especially if they sample only 2,000 respondents from a population of 63 million voters? (b) Who commissioned these surveys, and are the results biased by whoever finances them? (c) Do these surveys have a mind-conditioning effect on public opinion?

In the days to come, we should expect more polls to be reported, so I thought it would be best to take a closer look at the Philippine survey scene. In this first of a three-part series, I describe the science behind sample surveys. The second part identifies several opinion poll outfits and the people leading these firms, and then describes the methods and practices of two well-known firms with track records. The third part discusses trends in survey results on presidents' approval/satisfaction ratings and on voter preferences. I end the series with practical advice to the media and the public. I also call on survey outfits to be transparent about their methods and about the persons/institutions funding their operations, as these matters ultimately bear on the credibility of their data, their institutions, and the statistics discipline and profession.

What is a survey?

In a more general setting than an opinion poll, the book Survey Methodology by Robert Groves et al. defines a survey as a “systematic method for gathering information from (a sample of) entities for the purposes of constructing quantitative descriptors of the attributes of the larger population of which the entities are members.” The term “systematic” differentiates surveys from administrative reporting systems, big data, and other ways of gathering data. The phrase “a sample of” appears in parentheses because some surveys attempt to measure everyone in the population, while others select only a sample of respondents using chance methods. The quantitative descriptors, called statistics, are headline summaries estimating attributes of the larger population, such as the percentage of voters who will vote for a certain candidate. Survey results are weighted appropriately to make them representative of the population, so that a survey statistic, such as the proportion of voters preferring candidate A, yields a good estimate of the corresponding measure in the entire population.
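
To make the idea of weighting concrete, here is a minimal Python sketch of post-stratification, one common weighting approach (which method any particular pollster uses is not stated here). It assumes a hypothetical sample of 1,000 in which urban respondents are over-represented relative to made-up population shares; each respondent's weight is the population share of their group divided by that group's share of the sample.

```python
from collections import Counter

# Hypothetical respondents: (area, prefers_candidate_A). All numbers are made up.
sample = (
    [("urban", True)] * 300 + [("urban", False)] * 300     # 600 urban respondents
    + [("rural", True)] * 150 + [("rural", False)] * 250   # 400 rural respondents
)

# Assumed population shares of each group (e.g., from a census); illustrative only.
population_share = {"urban": 0.4, "rural": 0.6}

def poststratification_weights(sample, population_share):
    """Weight for each group = population share / sample share."""
    counts = Counter(area for area, _ in sample)
    n = len(sample)
    return {group: population_share[group] / (counts[group] / n) for group in counts}

def weighted_proportion(sample, weights):
    """Weighted share of respondents who prefer candidate A."""
    total = sum(weights[area] for area, _ in sample)
    yes = sum(weights[area] for area, prefers in sample if prefers)
    return yes / total

weights = poststratification_weights(sample, population_share)
unweighted = sum(prefers for _, prefers in sample) / len(sample)
weighted = weighted_proportion(sample, weights)
print(f"unweighted: {unweighted:.3f}, weighted: {weighted:.3f}")  # 0.450 vs 0.425
```

Because rural respondents in this made-up sample are both under-represented and less supportive, weighting pulls the estimate down from 45% to 42.5%, which matches 0.4 × 50% + 0.6 × 37.5%.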

An alternative definition, taken from a metadata document released by the United Nations Economic Commission for Europe (UNECE), is quite similar: “an investigation about the characteristics of a given population by means of collecting data from a sample of that population and estimating their characteristics through the systematic use of statistical methodology.”

What are the uses of a survey?

Surveys are used to gather knowledge and gain insights, and to analyze human behavior, welfare, thoughts, and opinions. In the Philippines, surveys are the data sources for many official statistics, especially on PTK, i.e., presyo (prices), trabaho (jobs), and kita (income), which are the top-of-mind concerns of Filipinos (Figure 2). For instance, when the Philippine Statistics Authority (PSA) releases quarterly growth in the Gross Domestic Product (GDP) and related measures, these statistics are sourced from monthly sample surveys of establishments, a quarterly survey of farms, and other data sources. Similarly, the PSA's unemployment and underemployment rates come from a quarterly survey called the Labor Force Survey, and its inflation data are based on a monthly survey of prices. Other government agencies, such as the Bangko Sentral ng Pilipinas (BSP), also produce statistics; the BSP, for instance, tracks consumer confidence through its quarterly Consumer Expectation Survey.

In the private sector, market research and opinion polls are conducted to generate measures that matter to businesses and the general public, such as the monthly purchasing managers’ index (PMI), a leading economic indicator; consumer satisfaction with products and services; approval ratings; and voting preferences.

Students and faculty in higher education institutions also conduct a number of surveys to generate data for undergraduate and graduate theses and for faculty studies. Educational institutions affiliated with hospitals also conduct survey research on health care.

What are the pros and cons of surveys?

Conducting surveys has a number of advantages, including relative ease of administration. A survey can be designed in less time than other data-collection methods require. Surveys can also be cost-effective, though the cost depends on the survey mode. A survey can also be administered remotely: online, via mobile devices or email, or through digital interviews. During this pandemic, firms that regularly conduct face-to-face surveys have started to experiment with digital means of interviewing and capturing data.

A survey can collect data from a large number of respondents. Numerous questions can be asked about a subject, giving extensive flexibility in data analysis. With survey software, data can be readily tabulated, especially since questions tend to be closed-ended, with fixed answer categories, and advanced statistical techniques can be used to assess validity, reliability, and statistical significance, including analyses of multiple variables at once. Survey results can also be generalized to the population, particularly for probability surveys. If a survey is designed and carried out well, we can be confident in the reliability of the data it generates.

But surveys also have disadvantages. Some survey errors result from deficiencies in coverage, non-response, question wording, interviewer effects, and social desirability. The reliability and accuracy of survey responses may depend on several factors. Respondents may be reluctant to report socially undesirable attitudes and behaviors honestly, and may over-report more desirable attributes. Respondents may also not be fully aware of their reasons for a given answer, because of poor memory of the subject or even boredom. Surveys with closed-ended questions may also have lower validity than those using other question types.

Non-response may seriously erode the reliability of aggregate results: those who choose to respond to a survey question may differ from those who choose not to, creating bias. Answer options to a survey question can also be unclear to respondents. For example, the option “somewhat agree” may mean different things to different respondents. ‘Yes’ or ‘no’ options can also be problematic, say when asking whether someone is literate, that is, whether they can read and write in a certain language; respondents may simply keep answering “yes”. Survey questions can be inflexible, and the results may lack depth unless they are followed up with qualitative interviews that probe the survey results. And finally, survey results can be misused and abused.
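
A small calculation shows how non-response can bias a result even when the initial sample is drawn well. The sketch below assumes a hypothetical population in which 50% support candidate A, with supporters more willing to respond (a 60% response rate) than non-supporters (40%); these rates are illustrative, not taken from any actual poll.

```python
def respondent_share(p_support, rr_support, rr_other):
    """Expected share of supporters among those who actually respond.

    p_support:  true share of supporters in the population
    rr_support: response rate among supporters
    rr_other:   response rate among non-supporters
    """
    responding_supporters = p_support * rr_support
    responding_others = (1 - p_support) * rr_other
    return responding_supporters / (responding_supporters + responding_others)

true_share = 0.50
observed = respondent_share(true_share, rr_support=0.60, rr_other=0.40)
print(f"true: {true_share:.0%}, among respondents: {observed:.0%}")  # 50% vs 60%

# If both groups respond at the same rate, the bias disappears.
equal_rates = respondent_share(true_share, rr_support=0.40, rr_other=0.40)
```

In this example the overall response rate is 50%, yet the 10-point bias comes from the gap in response rates between the two groups, not from the overall rate alone.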

How are surveys designed and implemented?

Designing and implementing a survey involves deciding how to identify and select potential sample respondents, some of whom may be hard to reach or reluctant to respond. Questions need to be evaluated and tested before large-scale survey operations. Decisions also need to be made on the mode for posing questions and collecting responses. For instance, at the start of the pandemic, face-to-face data collection was not allowed, and even now that it is allowed, there may be questions about whether we can rely fully on digital data capture when not everyone in the Philippines is digitally connected.

Interviewers also need to be trained and supervised. Data files, once put together, need to be checked for accuracy and internal consistency. Survey estimates may also need to be adjusted to correct for identified errors, including non-response.
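
One common way to adjust for non-response, shown here only as an illustrative sketch, is a weighting-class adjustment: within each class (for example, an age group), respondents' base weights are inflated by the inverse of that class's response rate, so that they also stand in for the non-respondents in their class. The class names, counts, and the base weight of 63,000 (chosen so that 1,000 selected persons represent 63 million voters) are all hypothetical.

```python
# Hypothetical sample: how many were selected and how many responded, per class.
classes = {
    "18-34": {"selected": 400, "responded": 200},
    "35-54": {"selected": 400, "responded": 300},
    "55+": {"selected": 200, "responded": 180},
}
BASE_WEIGHT = 63_000  # assumed: each selected person stands for 63,000 voters

def nonresponse_adjusted_weights(classes, base_weight):
    """Within each class, inflate the base weight by 1 / (class response rate)."""
    weights = {}
    for name, counts in classes.items():
        response_rate = counts["responded"] / counts["selected"]
        weights[name] = base_weight / response_rate
    return weights

weights = nonresponse_adjusted_weights(classes, BASE_WEIGHT)
for name, w in weights.items():
    print(f"{name}: {w:,.0f}")  # 126,000, 84,000 and 70,000 for the three classes

# The respondents' adjusted weights add back up to the full selected sample's total.
selected_total = BASE_WEIGHT * sum(c["selected"] for c in classes.values())
respondent_total = sum(classes[name]["responded"] * w for name, w in weights.items())
print(f"{selected_total:,} vs {respondent_total:,.0f}")
```

The method rests on the assumption that, within each class, respondents resemble non-respondents; if they do not, some bias remains after the adjustment.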

The gold standard in conducting surveys has been to select respondents using chance methods, so that the sample roughly represents the population, for example in the proportion of people who are going to vote for a certain candidate. Figure 3 illustrates this with a simple random sample (the second rectangle) in which 20% of the population was randomly selected. There are other designs, such as cluster sampling (which divides the population into groups, or clusters, and then samples from selected clusters), stratified sampling (in which a random sample is drawn from each stratum), or a combination of cluster and stratified sampling, as also shown in Figure 3. Regardless of design, the sample results can be used to make inferences about the entire population.
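
Two of these designs can be sketched in a few lines using Python's standard `random` module. This is an illustration under assumed inputs: a hypothetical sampling frame of 1,000 voter IDs split into three made-up regions, the 20% sampling fraction used in the simple random sample of Figure 3, and proportional allocation for the stratified sample.

```python
import random

def region_of(voter_id):
    """Assign a hypothetical region label; the split is made up for illustration."""
    if voter_id < 300:
        return "Region A"
    if voter_id < 700:
        return "Region B"
    return "Region C"

# Hypothetical sampling frame: 1,000 voter IDs with their (made-up) region.
frame = [(voter_id, region_of(voter_id)) for voter_id in range(1000)]

def simple_random_sample(frame, fraction, rng):
    """Every unit has the same chance of selection; no unit is picked twice."""
    return rng.sample(frame, round(len(frame) * fraction))

def stratified_sample(frame, fraction, rng):
    """Draw a separate simple random sample within each stratum (region),
    taking the same fraction from every stratum (proportional allocation)."""
    strata = {}
    for unit in frame:
        strata.setdefault(unit[1], []).append(unit)
    sample = []
    for units in strata.values():
        sample.extend(rng.sample(units, round(len(units) * fraction)))
    return sample

rng = random.Random(2021)  # fixed seed so the draw is reproducible
srs = simple_random_sample(frame, 0.20, rng)
strat = stratified_sample(frame, 0.20, rng)
print(len(srs), len(strat))  # 200 200
```

Unlike the simple random sample, the stratified draw guarantees that each region appears in exactly its population proportion (60, 80, and 60 units here), which removes one source of chance variation.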

Across the world, National Statistics Offices (NSOs) such as the PSA typically select respondents for household surveys in multiple stages, usually at least two. In the first stage, they divide the country into primary sampling units (PSUs), often villages, and select a sample of PSUs; then, within the selected PSUs, they select secondary sampling units, such as dwellings or households. The sampling done by reputable polling firms is very similar in principle. Surveys of businesses, on the other hand, involve stratifying firms into large firms (all of which are interviewed) and micro, small, and medium establishments (MSMEs), which are sampled proportional to size.
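
A two-stage household design can be sketched as follows. This is only an illustration: the frame of 500 hypothetical villages (PSUs) with 40 to 200 households each is generated at random, and both stages here use simple random sampling, whereas actual NSO and pollster designs typically add stratification and may select PSUs with probability proportional to size. The sketch also records each household's design weight, the inverse of its overall chance of selection.

```python
import random

rng = random.Random(7)  # fixed seed for a reproducible illustration

# Hypothetical frame: 500 villages (PSUs), each with a list of household IDs.
villages = {
    f"village_{i}": [f"v{i}_hh{j}" for j in range(rng.randint(40, 200))]
    for i in range(500)
}

def two_stage_sample(villages, n_psu, hh_per_psu, rng):
    """Stage 1: pick n_psu villages by simple random sampling.
    Stage 2: pick hh_per_psu households within each selected village.
    Returns (household, design_weight) pairs."""
    chosen = rng.sample(sorted(villages), n_psu)
    p_psu = n_psu / len(villages)  # chance that a given village is chosen
    selected = []
    for village in chosen:
        households = villages[village]
        p_hh = hh_per_psu / len(households)  # chance of a household within its village
        for hh in rng.sample(households, hh_per_psu):
            selected.append((hh, 1 / (p_psu * p_hh)))  # weight = 1 / overall probability
    return selected

sample = two_stage_sample(villages, n_psu=100, hh_per_psu=12, rng=rng)
print(len(sample))  # 1200

true_total = sum(len(hh) for hh in villages.values())
estimated_total = sum(weight for _, weight in sample)
print(true_total, round(estimated_total))
```

Summing the design weights over the sample gives an estimate of the total number of households in the frame, a quick check that the weights are consistent with the design.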

Why do opinion polls use a sample size of 1,200?

People are often surprised that polls use only 1,200 respondents to get a national reading of the proportion of voters who prefer a candidate, even though the voting population is 63 million Filipinos. Of course, some politicians may be the worst critics of polls, since they think the only numbers that matter are the counts on election day, especially when poll results are not in their favor. I often tell graduate students that if they don’t believe sampling works, then on their next visit to a hospital for a blood test, would they be willing to give the nurse all their blood, rather than a mere blood sample?

So why do polls use only 1,200 respondents when the target population, as in the case of Filipino voters, is in the millions? My FB friend suggested that 10% of the total population would be a good sample size. On the contrary, statistical theory tells us that when we estimate the proportion of voters in a population from a sample, the estimate has a measure of precision, called the “standard error”, that depends both on the true value of the proportion and on the sample size. In particular, the standard error is inversely proportional to the square root of the sample size. In other words, to double the precision (that is, to halve the standard error), we would need to quadruple the sample size. And if we want a margin of error of 3 percentage points, meaning we want the estimate to be within 3 points of the true value with roughly 95% confidence, then we would need a sample size n of about 1,111, which rounded up to the nearest hundred is 1,200.
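
The arithmetic behind the figure of 1,111 can be reproduced in a few lines. The sketch assumes simple random sampling, for which the standard error of a sample proportion p is sqrt(p(1 - p)/n). It takes the margin of error as two standard errors (roughly 95% confidence) and uses the worst case p = 0.5, so the required sample size is n = 4p(1 - p)/e² = 1/e². With the more precise multiplier of 1.96, the requirement drops to about 1,068.

```python
import math

def standard_error(p, n):
    """Standard error of a sample proportion under simple random sampling."""
    return math.sqrt(p * (1 - p) / n)

def required_sample_size(margin, p=0.5, z=2.0):
    """Smallest n for which z * standard_error(p, n) <= margin.
    p = 0.5 is the worst case because it maximizes p * (1 - p)."""
    return math.ceil(z**2 * p * (1 - p) / margin**2)

n = required_sample_size(0.03)
print(n)                                   # 1112, i.e. 1,111.1 rounded up
print(math.ceil(n / 100) * 100)            # 1200
print(required_sample_size(0.03, z=1.96))  # 1068
ratio = standard_error(0.5, 1200) / standard_error(0.5, 4800)
print(f"{ratio:.1f}")                      # 2.0: four times the sample halves the SE
```

Note that the population size appears nowhere in these formulas, which is why 1,200 respondents can serve for 63 million voters.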

When a probability survey is repeated many times, the estimated proportion will vary from sample to sample, but in a predictable way. The population size N has practically nothing to do with determining the sample size n, as long as the population is much larger than the sample. With a sample size of 1,200, we would expect that in about 95% of such samples, the proportion of people who say “yes” to the survey question “will you vote for Isko?” differs by less than 3 percentage points from the proportion of people in the whole population who would say “yes” if asked.
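
Both claims in this paragraph can be checked with a short simulation and one formula. The sketch assumes a hypothetical true support level of 50% and draws 1,000 independent polls of 1,200 respondents, treating each respondent as an independent draw (a good approximation when the population is in the millions). It then applies the finite population correction, sqrt((N - n)/(N - 1)), which is the only place N enters the standard error of a proportion, to compare N = 1 million with N = 63 million.

```python
import math
import random

def simulate_polls(true_p, n, reps, rng):
    """Sample proportion from `reps` independent polls of size n,
    treating each respondent as an independent draw."""
    return [sum(rng.random() < true_p for _ in range(n)) / n for _ in range(reps)]

def fpc_standard_error(p, n, N):
    """Standard error of a proportion with the finite population correction."""
    return math.sqrt(p * (1 - p) / n) * math.sqrt((N - n) / (N - 1))

rng = random.Random(42)
estimates = simulate_polls(true_p=0.50, n=1200, reps=1000, rng=rng)
within_3_points = sum(abs(e - 0.50) < 0.03 for e in estimates) / len(estimates)
print(f"share of polls within 3 points: {within_3_points:.1%}")

se_small = fpc_standard_error(0.5, 1200, 1_000_000)
se_large = fpc_standard_error(0.5, 1200, 63_000_000)
print(f"N = 1 million: {se_small:.5f}; N = 63 million: {se_large:.5f}")
```

In theory about 96% of such polls should land within 3 points of the truth, so the simulated share should come out close to that. The two standard errors are both about 1.44 percentage points and differ by less than a thousandth of a percentage point.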

We could increase the sample size to get a smaller margin of error, but we have to do a cost-benefit analysis: is the added precision from a larger sample worth the added cost? For opinion polls, a sample size of 1,200 does a fairly good job unless the race is very close.
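
The caveat about close races can be made concrete. When two candidates' shares p1 and p2 come from the same sample, the standard error of the lead p1 - p2 is sqrt((p1 + p2 - (p1 - p2)²)/n), which is larger than the standard error of either share alone. The sketch below assumes hypothetical shares of 45% and 43% in a poll of 1,200 and takes the margin of error as two standard errors.

```python
import math

def lead_margin_of_error(p1, p2, n, z=2.0):
    """Margin of error for the lead p1 - p2 when both shares come from one sample.
    For multinomial responses, Var(p1_hat - p2_hat) = (p1 + p2 - (p1 - p2)**2) / n."""
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)

p1, p2, n = 0.45, 0.43, 1200  # hypothetical shares of the two leading candidates
moe = lead_margin_of_error(p1, p2, n)
print(f"lead: {p1 - p2:.1%} +/- {moe:.1%}")  # lead: 2.0% +/- 5.4%
print("statistically clear lead" if p1 - p2 > moe else "too close to call")
```

Quadrupling the sample to 4,800 would only halve this margin to about 2.7 points, still larger than the 2-point lead: this is the cost-benefit trade-off in miniature.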

What could affect survey responses?

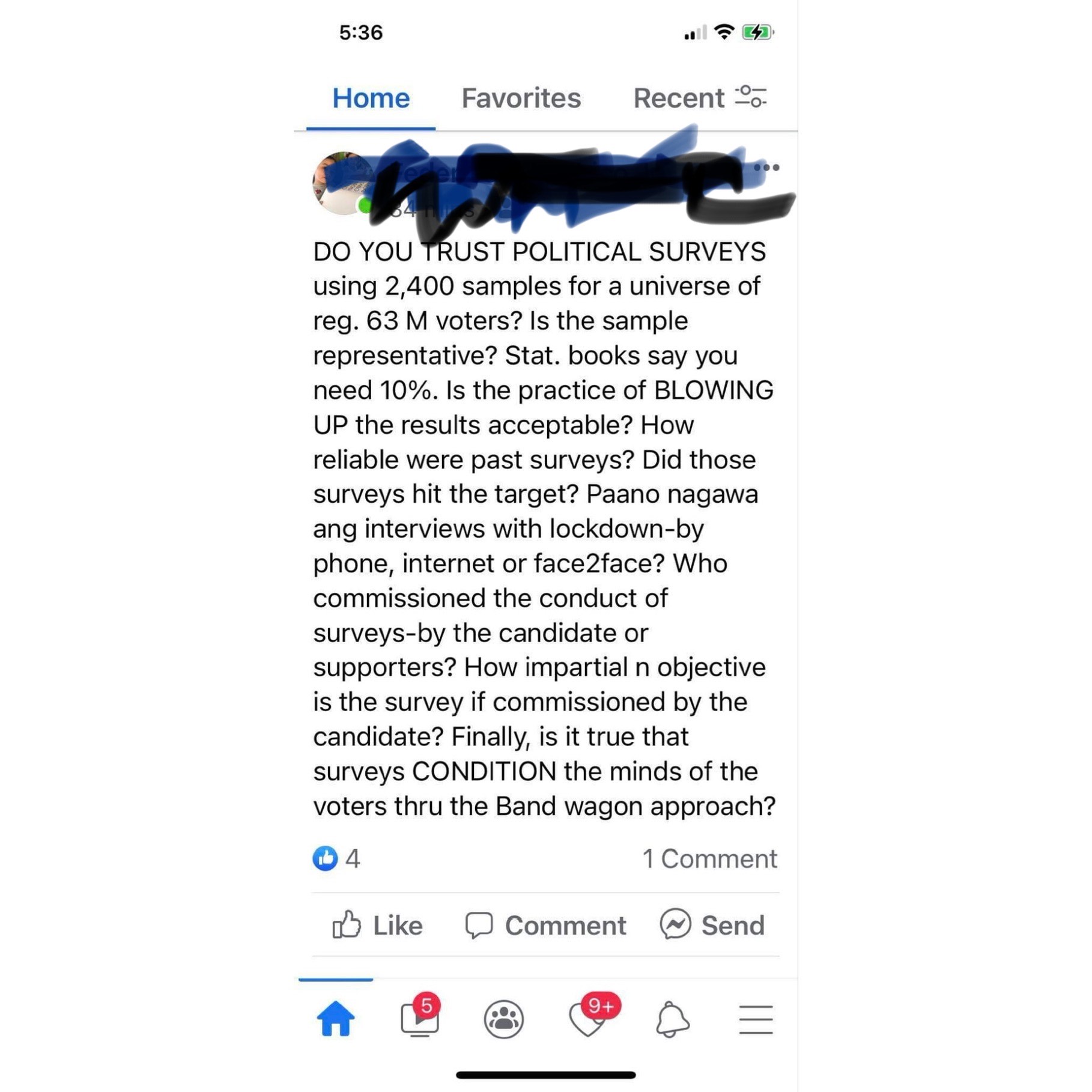

Aside from sampling errors, which result from using a sample rather than a full census to estimate attributes of a population and which can be reduced with a bigger sample, there are also non-sampling errors (also called measurement errors) to contend with. In the conduct of a survey, these can arise from six sources (Figure 4): (a) the questionnaire, which may be too complex; (b) the respondent, who may not want to be truthful; (c) the interviewer, who may influence the respondent’s answers; (d) the respondent’s information system, for instance, when asked their age, the respondent may misremember, or may not be able to find a birth certificate to verify it; (e) the mode of data collection; and even (f) the survey setting itself.

Are there inaccuracies in poll forecasting?

At various points in the history of US presidential elections, surveys have provided clues on who would win, but there have also been disasters in forecasting elections. In 1936, the Literary Digest, a famed magazine in the United States, conducted a survey that drew responses from around 2.4 million people. The Digest erroneously predicted that the incumbent, Franklin D. Roosevelt, would lose to challenger Kansas Governor Alfred Landon, 43% to 57%. In fact, Landon received only 38% of the total vote (and the Digest went bankrupt thereafter). Meanwhile, George Gallup, who had set up his own polling organization, correctly forecast Roosevelt’s victory from a sample of just 50,000 people, though his forecast that FDR would get 56% of the popular vote understated the actual result.

A post-mortem analysis revealed coverage errors arising from selection bias. The Literary Digest’s list of targeted respondents was taken from telephone books, magazine subscriptions, club membership lists, and automobile registrations. Inadvertently, the Digest targeted well-to-do voters, who were predominantly Republican and tended to vote for the Republican candidate. The sample had a built-in bias favoring one group over another. There was also non-response bias: of the 10 million people the Digest targeted, only 2.4 million actually responded. A response rate of 24% is far too low to yield reliable estimates of population parameters. Here we see that obtaining a large number of respondents does not cure procedural defects; it only repeats them over and over again!
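
The lesson that a large sample cannot cure a biased design can be expressed through total (root-mean-square) error, sqrt(bias² + p(1 - p)/n): the sampling part shrinks as n grows, but the bias does not. The numbers below are hypothetical, loosely patterned on the 1936 case: an assumed true share of 60% for the incumbent and a biased respondent pool whose estimates center on 43%.

```python
import math

def total_error(true_p, expected_estimate, n):
    """Root-mean-square error: sqrt(bias**2 + sampling variance).
    The variance is computed around the value the design tends to produce."""
    bias = expected_estimate - true_p
    variance = expected_estimate * (1 - expected_estimate) / n
    return math.sqrt(bias**2 + variance)

TRUE_P = 0.60  # hypothetical true share for the incumbent
scenarios = [
    ("unbiased design, n = 1,200", 0.60, 1_200),
    ("biased design, n = 10,000", 0.43, 10_000),
    ("biased design, n = 2,400,000", 0.43, 2_400_000),
]
for label, centre, n in scenarios:
    print(f"{label}: total error {total_error(TRUE_P, centre, n):.1%}")
# prints 1.4%, 17.0% and 17.0% for the three scenarios
```

Going from 10,000 to 2.4 million biased responses leaves the total error essentially unchanged at about 17 points, while a well-designed sample of 1,200 stays within a point or two.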

Much closer to the present, in 2016, election forecasters like Nate Silver, and even institutions like ABC News and the New York Times, put Hillary Clinton’s chance of winning anywhere from 70% to as high as 99%, and pegged her as the heavy favorite in states such as Pennsylvania and Wisconsin that actually went to Donald Trump. Four years earlier, Nate Silver had gained fame for his election forecasts, but in 2016 he, like nearly everyone else, got it wrong. The forecasts wrongly favored Clinton because state polls systematically over-estimated the Clinton vote. In short, the people who responded to polls at the state level were not a representative sample of the voting population.

In some states, the voters who actually showed up to cast their ballots were not the ones pollsters had expected.

Do polls have effects on public opinion?

Some people wonder whether the public can be manipulated by survey results, for instance into joining a bandwagon or rallying behind an underdog. This is why in a number of countries, laws or regulations attempt to control how opinion polls are reported, or even whether they are permitted at all. In Montenegro, for example, opinion polls and any other projections of election results are forbidden; on voting day, it is even forbidden to publicize the results of previous elections.

As for evidence on the effect of polls on public opinion, however, to cut a long story short, the survey research literature is mixed. This is why in the Philippines, even though we have a law regulating election surveys, we do not ban opinion polls, which are viewed as part of freedom of speech.

What questions should we ask about opinion polls?

Because surveys are subject to all these errors, or deviations from true values (better referred to as statistical or measurement errors, to distinguish them from simple mistakes), Darrell Huff, in Chapter 10 (“How to Talk Back to a Statistic”) of his 1954 book How to Lie with Statistics, suggests that we ask five questions:

- Who says so? (Who conducted the survey? Who paid for it?)

- How does he know? (How was the survey conducted?)

- What’s missing? (How many respondents were interviewed? What was the margin of error? How were the questions asked?)

- Did someone change the subject? (Are the conclusions actually based on the data described?)

- Does it make sense? (What assumptions are made in the survey report and analysis?)

What survey innovations have emerged amid COVID-19?

We will return to these questions in the next part of this series, in the context of our regulations on election surveys. But let me close this first part by noting that the pandemic changed many of our ways of doing things, including how we collect data.

Surveys in the Philippines have traditionally been administered face-to-face (FTF), a mode with its own advantages and disadvantages (see Table 1). But there are many other ways of administering interviews, some involving little data-collector involvement, such as e-mailing questionnaires or, now, using artificial intelligence bots on social media.

In FTF interviews, a trained interviewer meets a respondent at home, asks standardized questions, and records the answers (traditionally with pen and paper, but now directly on tablets for direct data capture). During FTF interviews, interviewers can build rapport, motivate respondents to answer conscientiously and disclose sensitive information, and observe the respondent’s nonverbal language and circumstances. On the other hand, in FTF mode, interviewers intrude into people’s homes and schedules, spend time and resources traveling, and must be non-threatening to respondents as they probe about potentially embarrassing opinions and behaviors.

Respondents, for their part, must allow a stranger into their homes, must be prepared for a social encounter, may not be able to multitask while answering, and may even feel embarrassed to answer sensitive questions, such as whether any member of the household has ever experienced physical or sexual violence from another member of the household, or whether anyone is using drugs. And amid COVID-19 lockdowns, especially in 2020, FTF surveys were not allowed.

Results of several recent studies, e.g., Schober (2018), suggest that for some respondents, asynchronous interviewing modes, which reduce the interviewer’s social presence and allow respondents to participate while mobile or multitasking, may yield higher-quality data and greater respondent disclosure and satisfaction. But the problem remains that not everyone in the Philippines is digitally connected, so at best we would get only the pulse of the upper 60% of the socio-economic spectrum.

More to be discussed in the second and third parts of this series. – Rappler.com

Dr. Jose Ramon "Toots" Albert is a professional statistician working as a Senior Research Fellow of the government’s think tank Philippine Institute for Development Studies (PIDS). Toots’ interests span poverty and inequality, social protection, education, gender, climate change, sustainable consumption, and various development issues. He earned a PhD in Statistics from the State University of New York at Stony Brook. He teaches part-time at the De La Salle University and at the Asian Institute of Management.
